Deck 7: Splunk Core Certified Consultant

1

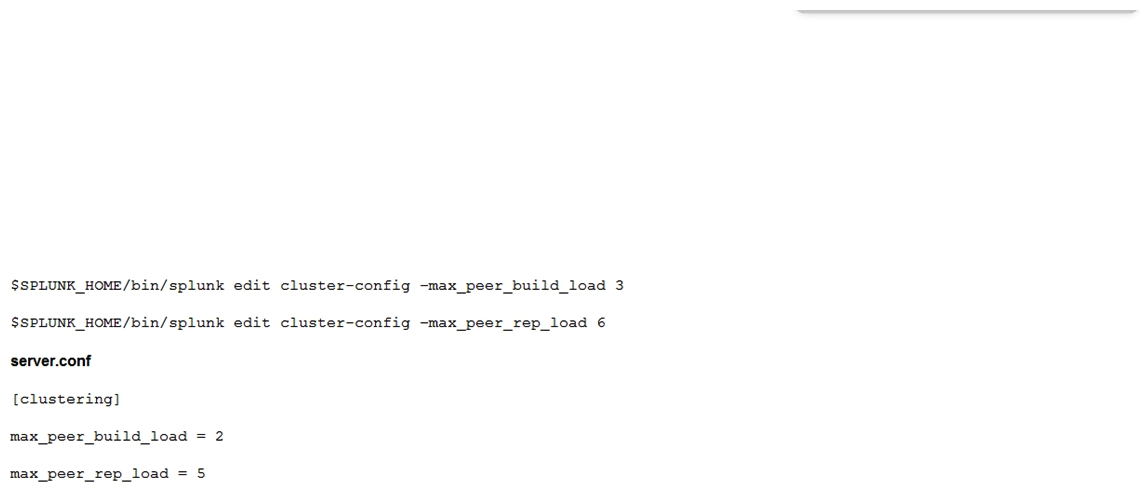

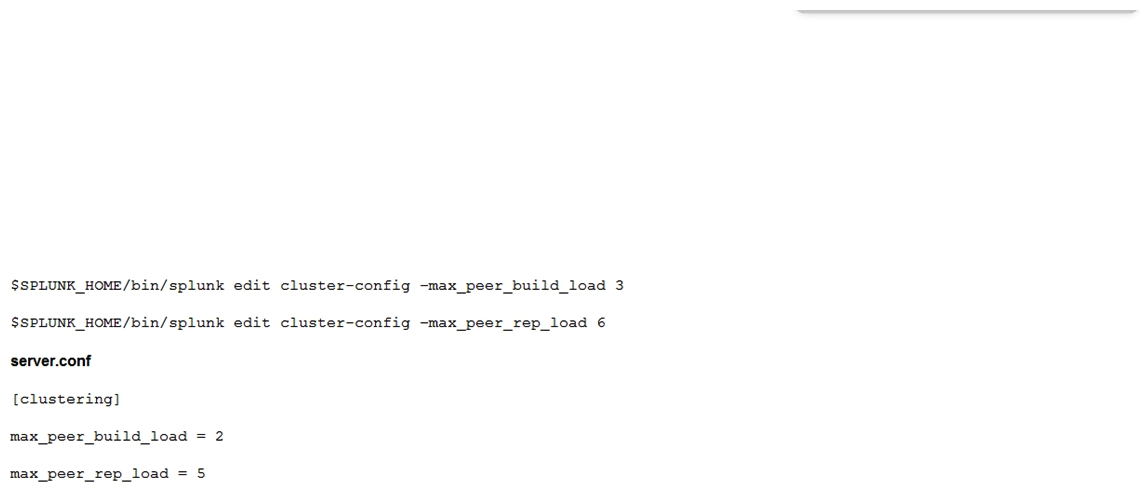

What should be considered when running the following CLI commands with the goal of accelerating an indexer cluster migration to new hardware?

A) Data ingestion rate

B) Network latency and storage IOPS

C) Distance and location

D) SSL data encryption

B

2

A customer has a search head cluster (SHC) of six members split evenly between two data centers (DCs). The customer is concerned about network connectivity between the two DCs due to frequent outages. Which of the following is true as it relates to SHC resiliency when a network outage occurs between the two DCs?

A) The SHC will function as expected as the SHC deployer will become the new captain until the network communication is restored.

B) The SHC will stop all scheduled search activity within the SHC.

C) The SHC will function as expected as the minimum required number of nodes for a SHC is 3.

D) The SHC will function as expected as the SHC captain will fall back to previous active captain in the remaining site.

B

3

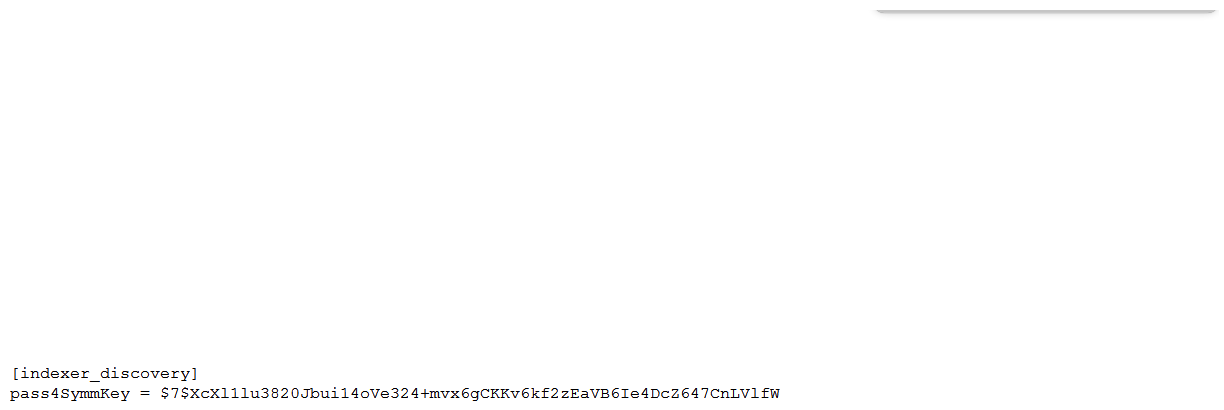

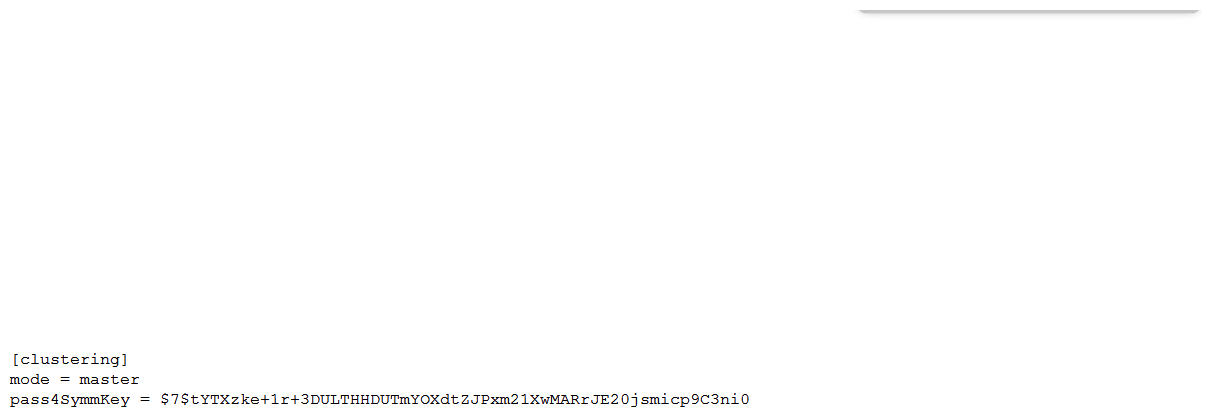

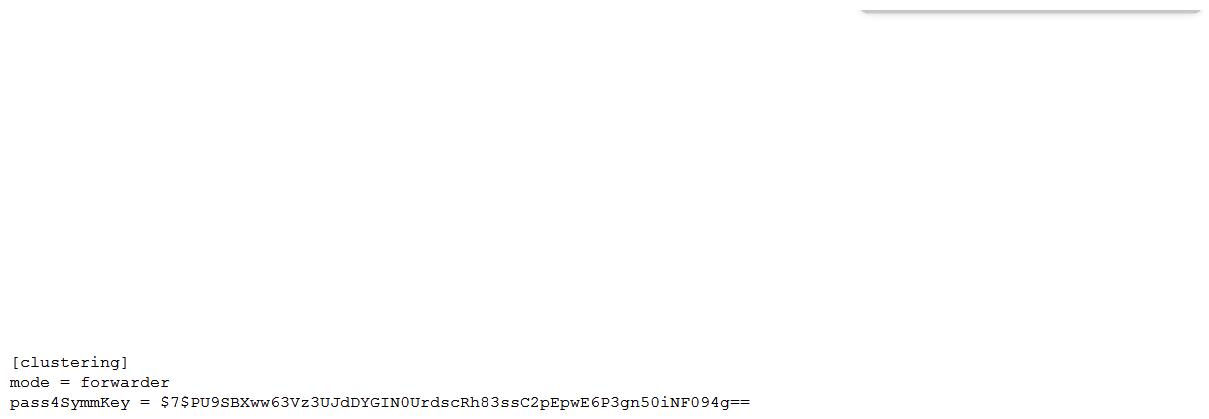

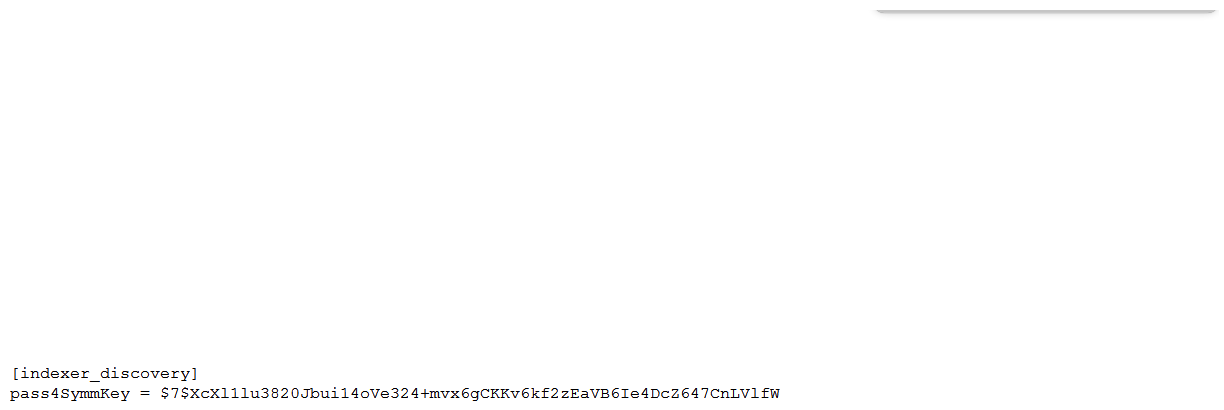

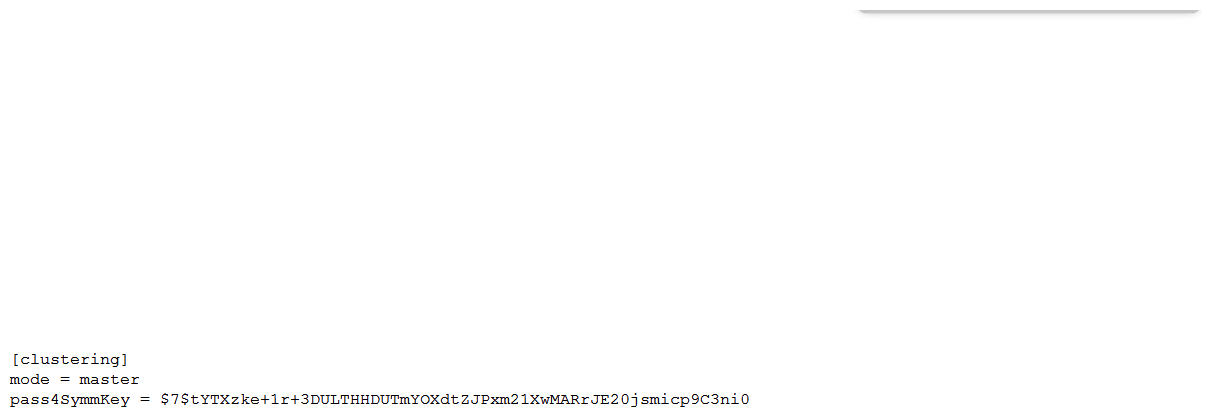

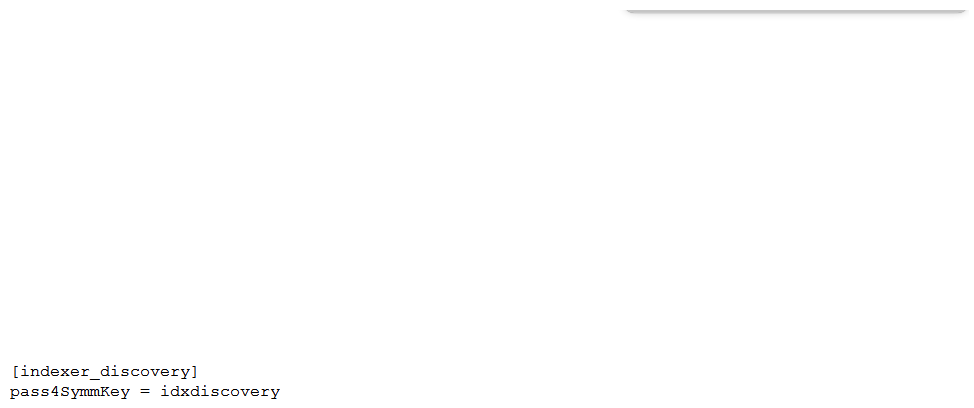

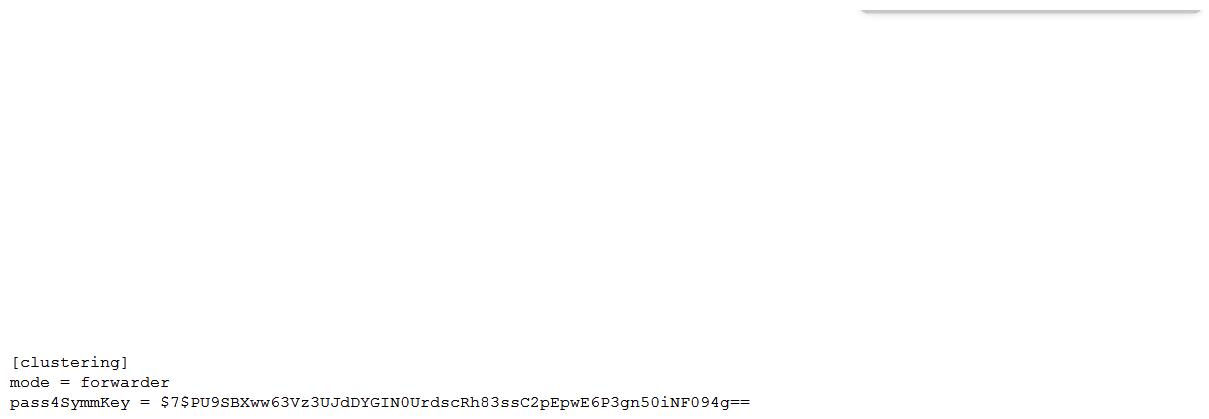

Which of the following server.conf stanzas indicates the Indexer Discovery feature has not been fully configured (restart pending) on the Master Node?

A)

B)

C)

D)

C

4

In a single indexer cluster, where should the Monitoring Console (MC) be installed?

A) Deployer sharing with master cluster.

B) License master that has 50 clients or more.

C) Cluster master node

D) Production Search Head

5

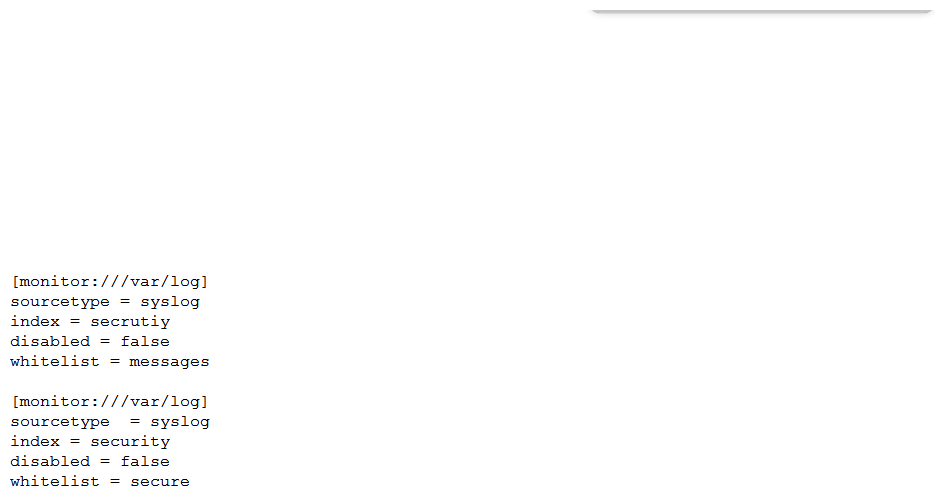

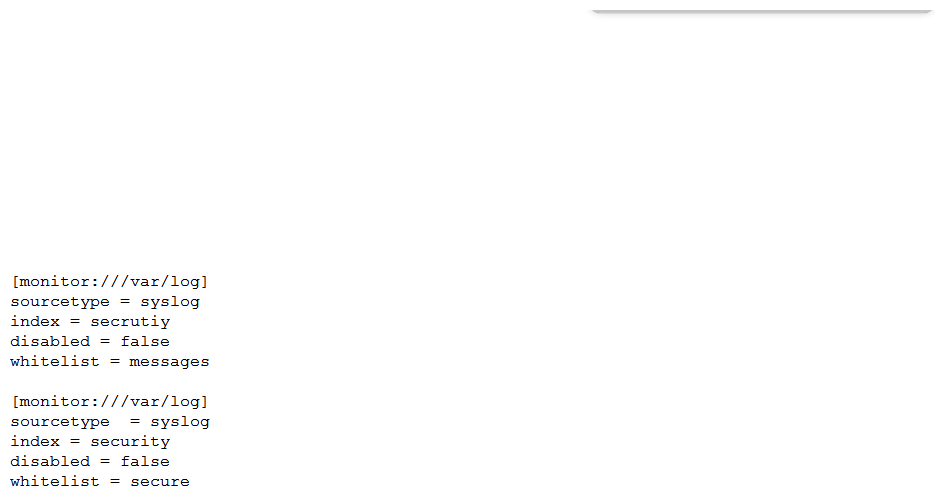

Consider the scenario where the /var/log directory contains the files secure, messages, cron, and audit. A customer has created the inputs.conf stanzas shown above in the same Splunk app in an attempt to monitor the files secure and messages. Which file(s) will actually be actively monitored?

A) /var/log/secure

B) /var/log/messages

C) /var/log/messages, /var/log/cron, /var/log/audit, /var/log/secure

D) /var/log/secure, /var/log/messages

6

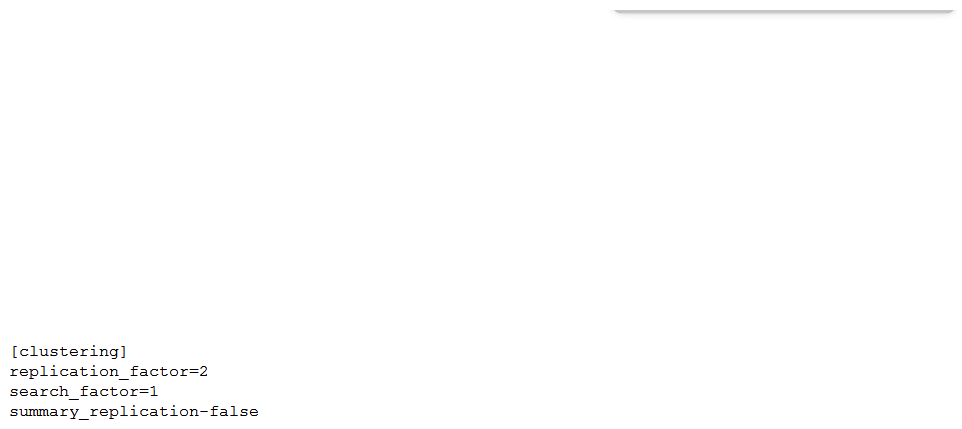

The customer has an indexer cluster supporting a wide variety of search needs, including scheduled search, data model acceleration, and summary indexing. The excerpt above is from the cluster master's server.conf. Which strategy represents the minimum and least disruptive change necessary to protect the searchability of the indexer cluster in case of indexer failure?

A) Enable maintenance mode on the CM to prevent excessive fix-up and bring the failed indexer back online.

B) Leave replication_factor=2, increase search_factor=2, and enable summary_replication.

C) Convert the cluster to multi-site and modify server.conf to set site_replication_factor=2, site_search_factor=2.

D) Increase to replication_factor=3, search_factor=2 to protect the data and ensure there is always a searchable copy.

7

What is the Splunk PS recommendation when using the deployment server and building deployment apps?

A) Carefully design smaller apps with specific configuration that can be reused.

B) Only deploy Splunk PS base configurations via the deployment server.

C) Use $SPLUNK_HOME/etc/system/local configurations on forwarders and only deploy TAs via the deployment server.

D) Carefully design bigger apps containing multiple configs.

8

How does Monitoring Console (MC) initially identify the server role(s) of a new Splunk Instance?

A) The MC uses a REST endpoint to query the server.

B) Roles are manually assigned within the MC.

C) Roles are read from distsearch.conf.

D) The MC assigns all possible roles by default.

9

Which statement is true about subsearches?

A) Subsearches are faster than other types of searches.

B) Subsearches work best for joining two large result sets.

C) Subsearches run at the same time as their outer search.

D) Subsearches work best for small result sets.

10

A customer wants to implement LDAP because managing local Splunk users is becoming too much of an overhead. What configuration details are needed from the customer to implement LDAP authentication?

A) API: Python script with PAM/RADIUS details.

B) LDAP server: port, bind user credentials, path/to/groups, path/to/user.

C) LDAP server: port, bind user credentials, base DN for groups, base DN for users.

D) LDAP REST details, base DN for groups, base DN for users.

11

A customer wants to understand how Splunk bucket types (hot, warm, cold) impact search performance within their environment. Their indexers have a single storage device for all data. What is the proper message to communicate to the customer?

A) The bucket types (hot, warm, or cold) have the same search performance characteristics within the customer's environment.

B) While hot, warm, and cold buckets have the same search performance characteristics within the customer's environment, due to their optimized structure, the thawed buckets are the most performant.

C) Searching hot and warm buckets results in the best performance because by default the cold buckets are miniaturized by removing TSIDX files to save on storage cost.

D) Because the cold buckets are written to a cheaper/slower storage volume, they will be slower to search compared to hot and warm buckets which are written to Solid State Disk (SSD).

12

The customer wants to migrate their current Splunk indexer cluster to new hardware to improve indexing and search performance. What is the correct process and procedure for this task?

A) 1. Install new indexers. 2. Configure indexers into the cluster as peers; ensure they receive the same configuration via the deployment server. 3. Decommission old peers one at a time. 4. Remove old peers from the CM's list. 5. Update forwarders to forward to the new peers.

B) 2. Configure indexers into the cluster as peers; ensure they receive the cluster bundle and the same configuration as original peers.

C) 3. Update forwarders to forward to the new peers. 4. Decommission old peers one at a time. 5. Restart the cluster master (CM).

D) 4. Decommission old peers one at a time. 5. Remove old peers from the CM's list.

13

A customer has a new set of hardware to replace their aging indexers. What method would reduce the amount of bucket replication operations during the migration process?

A) Disable the indexing ports on the old indexers.

B) Disable replication ports on the old indexers.

C) Put the old indexers into manual detention.

D) Put the old indexers into automatic detention.

14

What is the primary driver behind implementing indexer clustering in a customer's environment?

A) To improve resiliency as the search load increases.

B) To reduce indexing latency.

C) To scale out a Splunk environment to offer higher performance capability.

D) To provide higher availability for buckets of data.

15

A [script://] input sends data to a Splunk forwarder using which method?

A) UDP stream

B) TCP stream

C) Temporary file

D) STDOUT/STDERR

16

In which of the following scenarios should base configurations be used to provide consistent, repeatable, and supportable configurations?

A) For non-production environments to keep their configurations in sync.

B) To ensure every customer has exactly the same base settings.

C) To provide settings that do not need to be customized to meet customer requirements.

D) To provide settings that can be customized to meet customer requirements.

17

A customer's deployment server (DS) is overwhelmed with forwarder connections after adding an additional 1000 clients. The phone home interval is set to the default of 60 seconds. To reduce the number of connection failures to the DS, what is recommended?

A) Create a tiered deployment server topology.

B) Reduce the phone home interval to 6 seconds.

C) Leave the phone home interval at 60 seconds.

D) Increase the phone home interval to 600 seconds.
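
Each deployment client checks in once per `phoneHomeIntervalInSecs` (set in deploymentclient.conf), so the average check-in load on the DS scales as clients divided by interval. A minimal Python sketch of that arithmetic, counting only the 1000 added clients (the existing client count is not given) and assuming check-ins are spread evenly over the interval; `phone_homes_per_second` is a hypothetical helper, not a Splunk API:

```python
def phone_homes_per_second(clients: int, interval_s: float) -> float:
    """Average deployment-client check-ins per second.

    Assumes check-ins are spread evenly across the phone home interval.
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    return clients / interval_s


# Compare the intervals named in the answer options, for the 1000 added clients only.
for interval in (6, 60, 600):
    rate = phone_homes_per_second(1000, interval)
    print(f"interval={interval:>3}s -> {rate:.2f} phone-homes/s")
```

The sketch ignores bursts, such as many clients restarting at once, so peak load can exceed these averages.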

18

Which configuration item should be set to false to significantly improve data ingestion performance?

A) AUTO_KV_JSON

B) BREAK_ONLY_BEFORE_DATE

C) SHOULD_LINEMERGE

D) ANNOTATE_PUNCT

19

Data can be onboarded using apps, Splunk Web, or the CLI. Which is the PS preferred method?

A) Create UDP input port 9997 on a UF.

B) Use the add data wizard in Splunk Web.

C) Use the inputs.conf file.

D) Use a scripted input to monitor a log file.

20

A customer has asked for a five-node search head cluster (SHC), but does not have the storage budget to use a replication factor greater than 2. They would like to understand what might happen in terms of the users' ability to view historic scheduled search results if they log onto a search head which doesn't contain one of the 2 copies of a given search artifact. Which of the following statements best describes what would happen in this scenario?

A) The search head that the user has logged onto will proxy the required artifact over to itself from a search head that currently holds a copy. A copy will also be replicated from that search head permanently, so it is available for future use.

B) Because the dispatch folder containing the search results is not present on the search head, the user will not be able to view the search results.

C) The user will not be able to see the results of the search until one of the search heads is restarted, forcing synchronization of all dispatched artifacts across all search heads.

D) The user will not be able to see the results of the search until the Splunk administrator issues the apply shcluster-bundle command on the search head deployer, forcing synchronization of all dispatched artifacts across all search heads.
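
How often a user lands on a member without a local copy of a given artifact is simple arithmetic for a five-member SHC keeping two copies. A minimal Python sketch, assuming users are load-balanced uniformly across members and the copies sit on distinct members; `chance_no_local_copy` is a hypothetical helper, not a Splunk API:

```python
from fractions import Fraction


def chance_no_local_copy(members: int, replication_factor: int) -> Fraction:
    """Fraction of SHC members that hold no local copy of a given artifact.

    Assumes exactly `replication_factor` copies on distinct members and that
    users are load-balanced uniformly across all members.
    """
    if not 0 < replication_factor <= members:
        raise ValueError("replication_factor must be between 1 and members")
    return Fraction(members - replication_factor, members)


print(chance_no_local_copy(5, 2))  # 3/5
```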

21

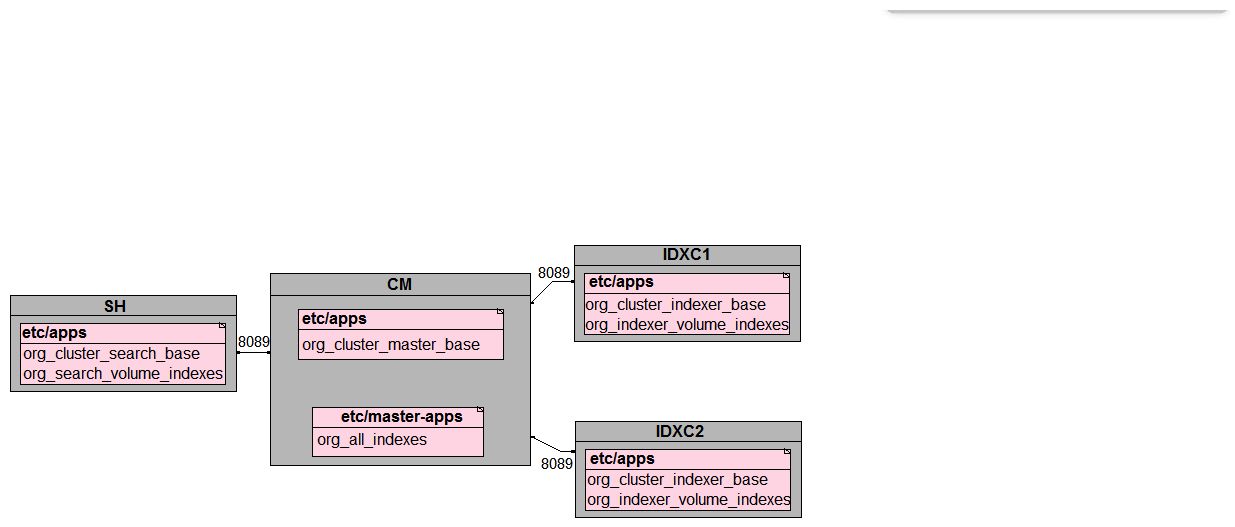

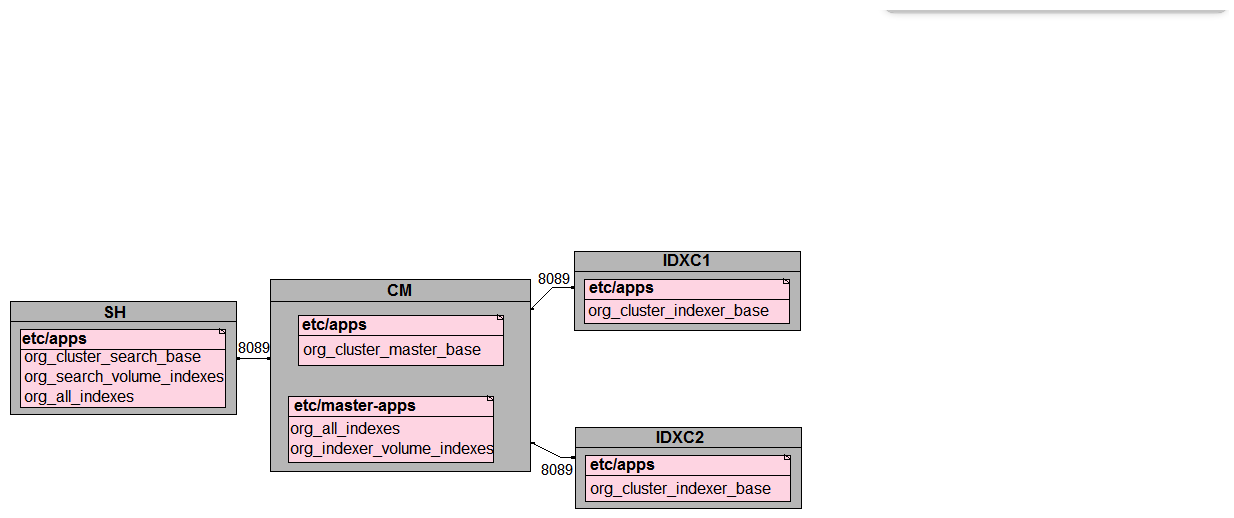

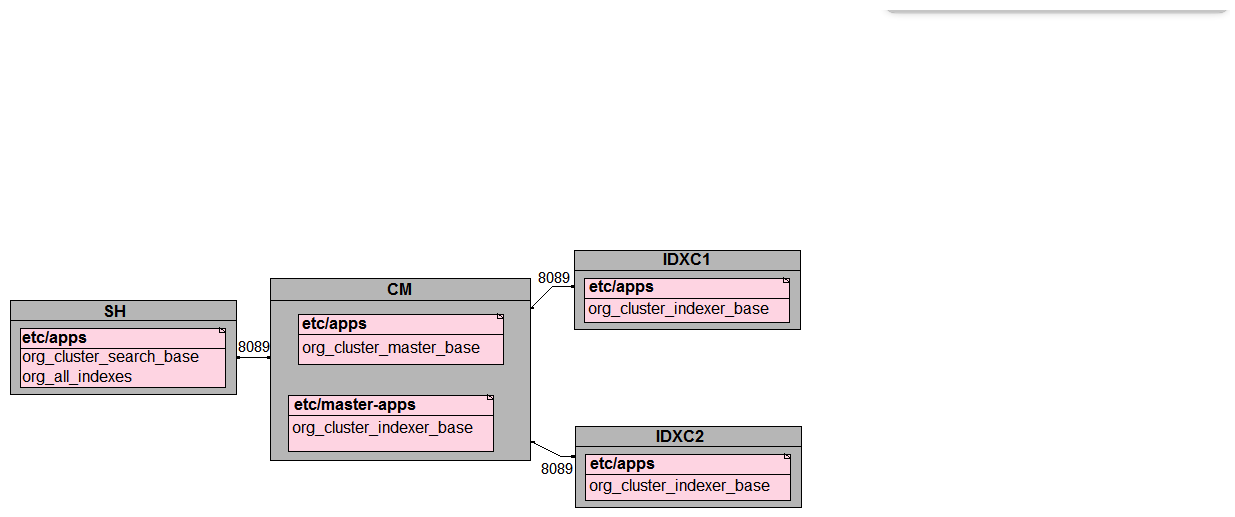

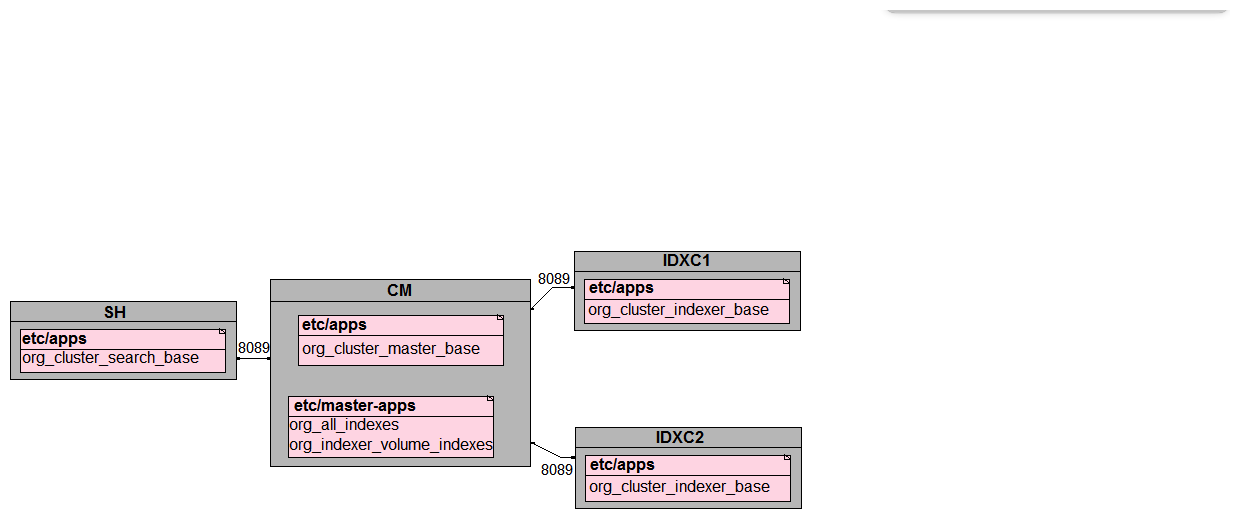

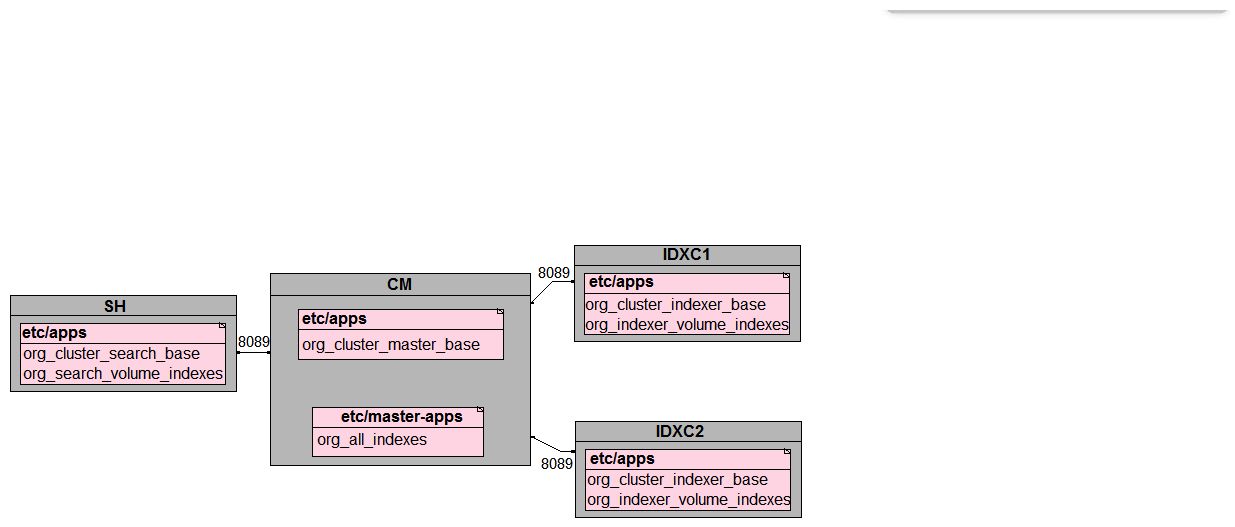

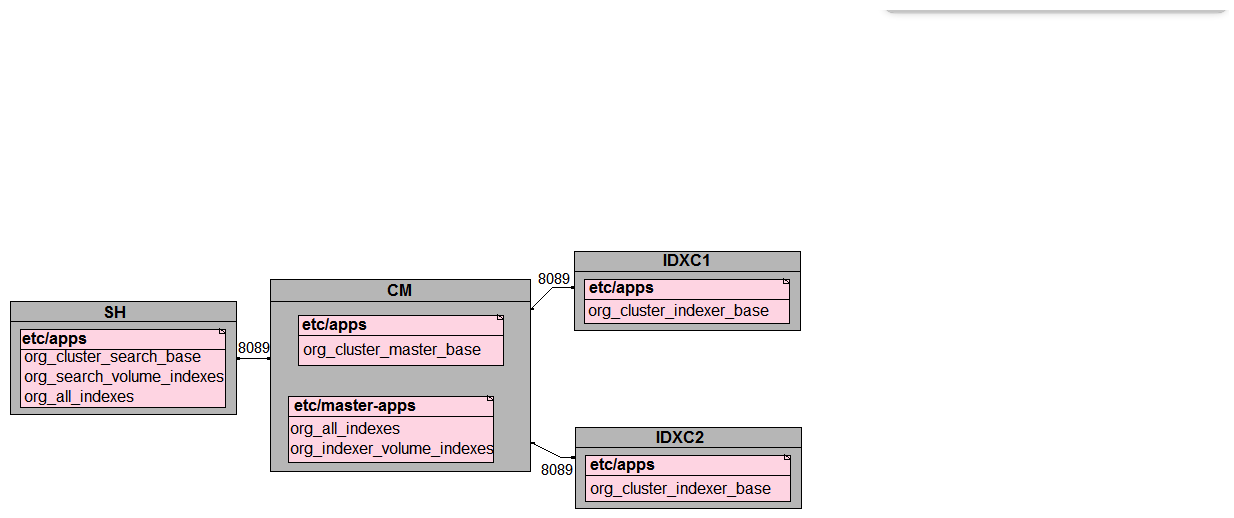

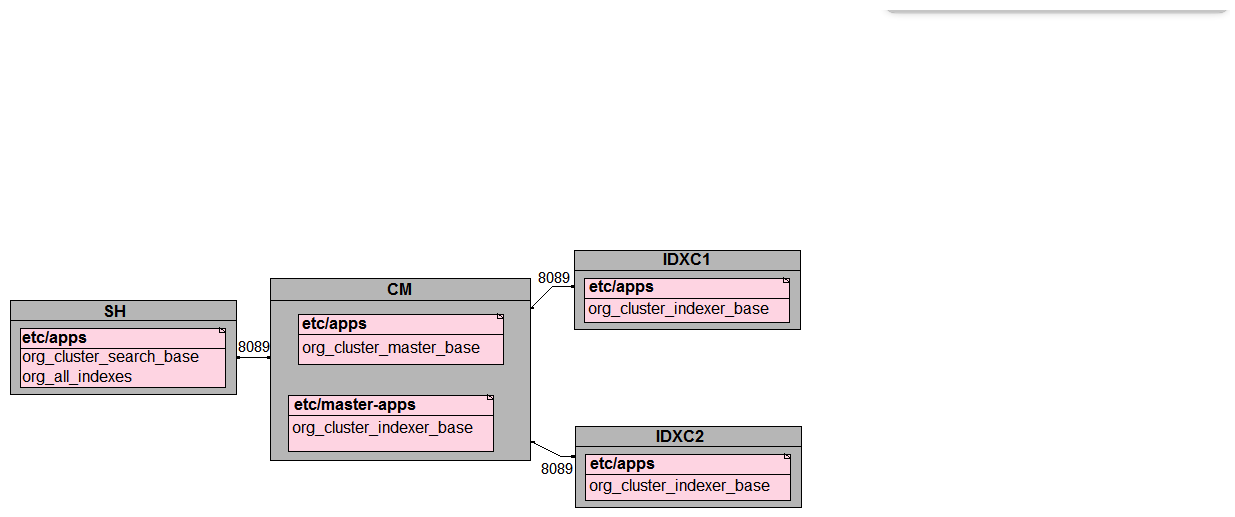

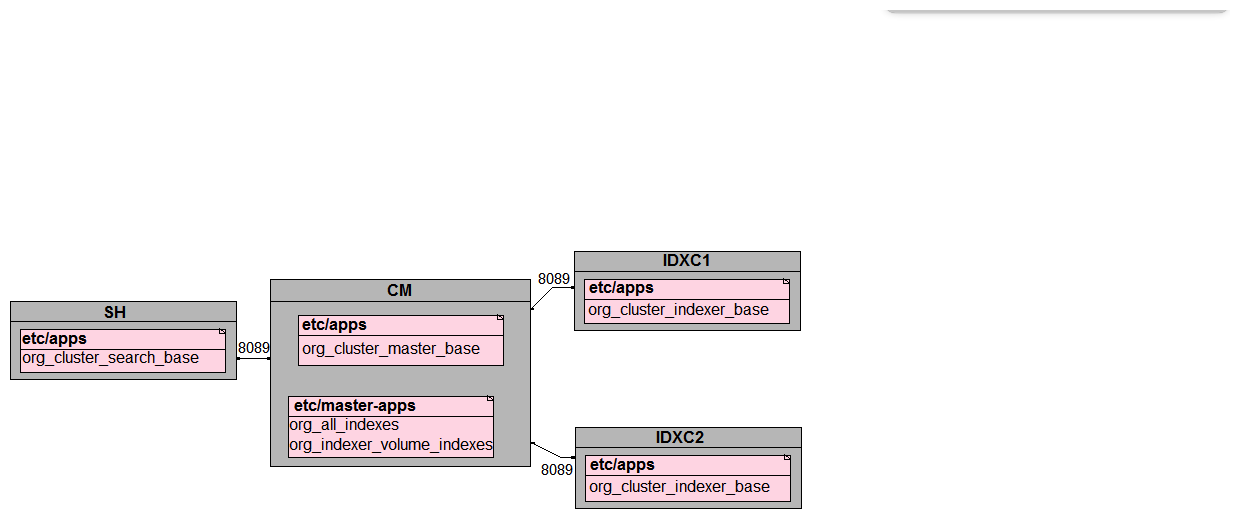

In preparation for the deployment of a new environment for a customer, which of the following mappings are correct per PS best practices?

A)

B)

C)

D)

22

What is the default push mode for a search head cluster deployer app configuration bundle?

A) full

B) merge_to_default

C) default_only

D) local_only

23

When setting up a multisite search head and indexer cluster, which nodes are required to declare site membership?

A) Search head cluster members, deployer, indexers, cluster master

B) Search head cluster members, deployment server, deployer, indexers, cluster master

C) All Splunk nodes, including forwarders, must declare site membership.

D) Search head cluster members, indexers, cluster master

24

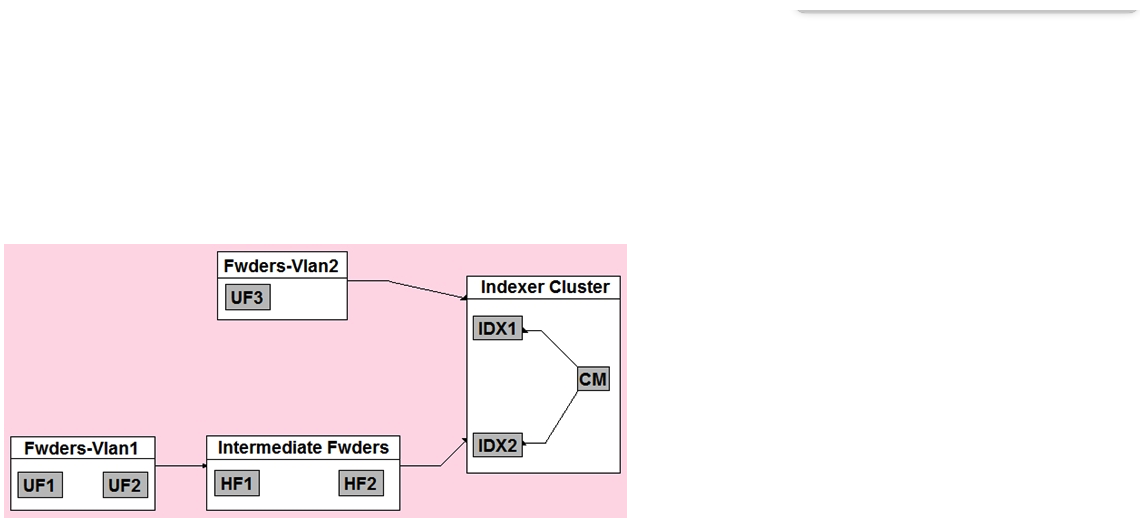

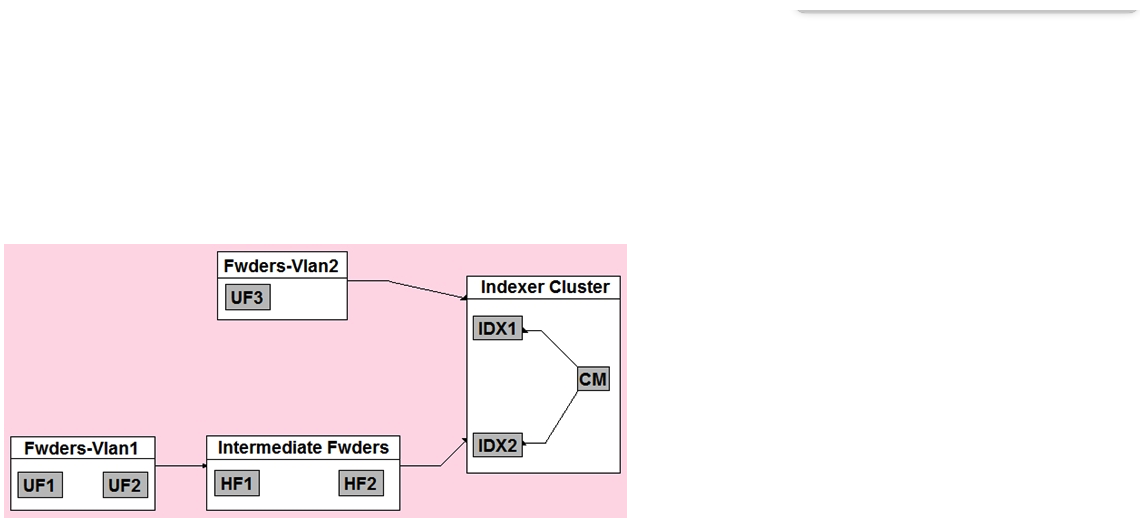

In the diagrammed environment shown above, the customer would like the data read by the universal forwarders to set an indexed field containing the UF's host name. Where would the parsing configurations need to be installed for this to work?

A) All universal forwarders.

B) Only the indexers.

C) All heavy forwarders.

D) On all parsing Splunk instances.

25

A customer has a network device that transmits logs directly with UDP or TCP over SSL. Using PS best practices, which ingestion method should be used?

A) Open a TCP port with SSL on a heavy forwarder to parse and transmit the data to the indexing tier.

B) Open a UDP port on a universal forwarder to parse and transmit the data to the indexing tier.

C) Use a syslog server to aggregate the data to files and use a heavy forwarder to read and transmit the data to the indexing tier.

D) Use a syslog server to aggregate the data to files and use a universal forwarder to read and transmit the data to the indexing tier.

26

When utilizing a subsearch within a Splunk SPL search query, which of the following statements is accurate?

A) Subsearches have to be initiated with the | subsearch command.

B) Subsearches can only be utilized with the | inputlookup command.

C) Subsearches have a default result output limit of 10000.

D) There are no specific limitations when using subsearches.

27

A customer is using regex to whitelist access logs and secure logs from a web server, but only the access logs are being ingested. Which troubleshooting resource would provide insight into why the secure logs are not being ingested?

A) list monitor

B) oneshot

C) btprobe

D) tailingprocessor

28

The Splunk Validated Architectures (SVAs) document provides a series of approved Splunk topologies. Which statement accurately describes how it should be used by a customer?

A) Customers should look at the category tables, pick the highest number that their budget permits, then select this design topology as the chosen design.

B) Customers should identify their requirements, provisionally choose an approved design that meets them, then consider design principles and best practices to come to an informed design decision.

C) Using the guided requirements gathering in the SVAs document, choose a topology that suits requirements, and be sure not to deviate from the specified design.

D) Choose an SVA topology code that includes Search Head and Indexer Clustering because it offers the highest level of resilience.

29

A customer has a multisite cluster (two sites, each site in its own data center), and users are experiencing slow responses when searches are run on search heads located in either site. The Search Job Inspector shows the delay is being caused by search heads on either site waiting for results to be returned by indexers on the opposing site. The network team has confirmed that there is limited bandwidth available between the two data centers, which are in different geographic locations. Which of the following would be the least expensive and easiest way to improve search performance?

A) Configure site_search_factor to ensure a searchable copy exists in the local site for each search head.

B) Move all indexers and search heads in one of the data centers into the same site.

C) Install a network pipe with more bandwidth between the two data centers.

D) Set the site setting on each indexer in the server.conf clustering stanza to be the same for all indexers regardless of site.

30

What is required to set up the HTTP Event Collector (HEC)?

A) Each HEC input requires a unique name but token values can be shared.

B) Each HEC input requires an existing forwarder output group.

C) Each HEC input entry must contain a valid token.

D) Each HEC input requires a Source name field.

31

Which command is most efficient for finding the pass4SymmKey of an indexer cluster?

A) find / -name server.conf -print | grep pass4SymKey

B) $SPLUNK_HOME/bin/splunk search | rest splunk_server=local /servicesNS/-/unhash_app/storage/passwords

C) $SPLUNK_HOME/bin/splunk btool server list clustering | grep pass4SymmKey

D) $SPLUNK_HOME/bin/splunk btool clustering list clustering --debug | grep pass4SymmKey

32

Which of the following statements applies to indexer discovery?

A) The Cluster Master (CM) can automatically discover new indexers added to the cluster.

B) Forwarders can automatically discover new indexers added to the cluster.

C) Deployment servers can automatically configure new indexers added to the cluster.

D) Search heads can automatically discover new indexers added to the cluster.

33

A customer is migrating their existing Splunk indexers from an old set of hardware to a new set of indexers. What is the easiest method to migrate the system?

A) 1. Add new indexers to the cluster as peers, in the same site (if needed). 2. Ensure new indexers receive common configuration. 3. Decommission old indexers (one at a time) to allow time for CM to fix/migrate buckets to new hardware. 4. Remove all the old indexers from the CM's list.

B) 1. Add new indexers to the cluster as peers, to a new site. 2. Ensure new indexers receive common configuration from the CM.

C) 1. Add new indexers to the cluster as peers, in the same site. 2. Update the replication factor by +1 to instruct the cluster to start replicating to new peers. 3. Allow time for CM to fix/migrate buckets to new hardware.

D) 1. Add new indexers to the cluster as a new site. 2. Update cluster master (CM) server.conf to include the new available site. 4. Remove the old indexers from the CM's list.

34

A customer with a large distributed environment has blacklisted a large lookup from the search bundle using distsearch.conf to decrease the bundle size. After this change, when running searches that use the blacklisted lookup, they see error messages in the Splunk Search UI stating that the file does not exist. What can the customer do to resolve the issue?

A) The search needs to be modified to ensure the lookup command specifies parameter local=true.

B) The blacklisted lookup definition stanza needs to be modified to specify setting allow_caching=true.

C) The search needs to be modified to ensure the lookup command specifies parameter blacklist=false.

D) The lookup cannot be blacklisted; the change must be reverted.


35

A Splunk indexer cluster is being installed and the indexers need to be configured with a license master. After the customer provides the name of the license master, what is the next step?

A) Enter the license master configuration via Splunk Web on each indexer before disabling Splunk Web.

B) Update /opt/splunk/etc/master-apps/_cluster/default/server.conf on the cluster master and apply a cluster bundle.

C) Update the Splunk PS base config license app and copy it to each indexer.

D) Update the Splunk PS base config license app and deploy it via the cluster master.


36

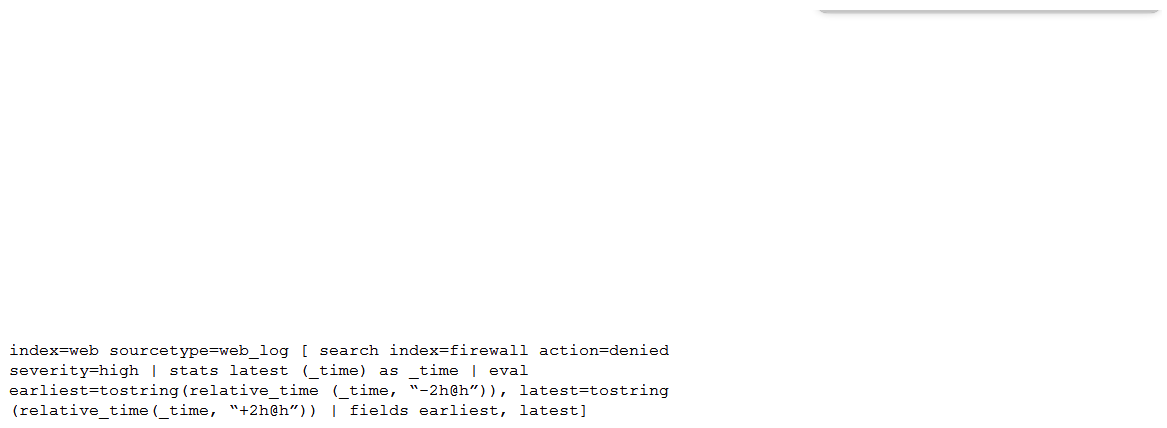

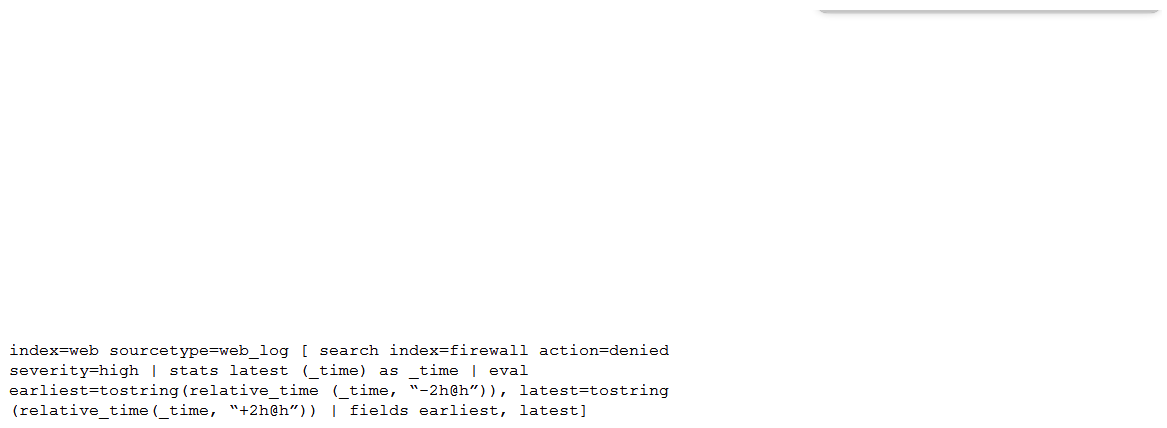

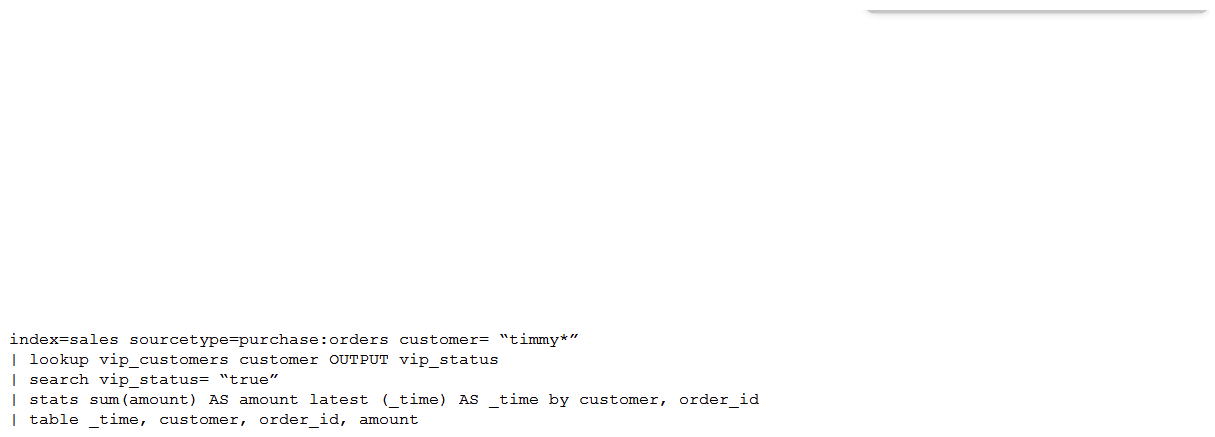

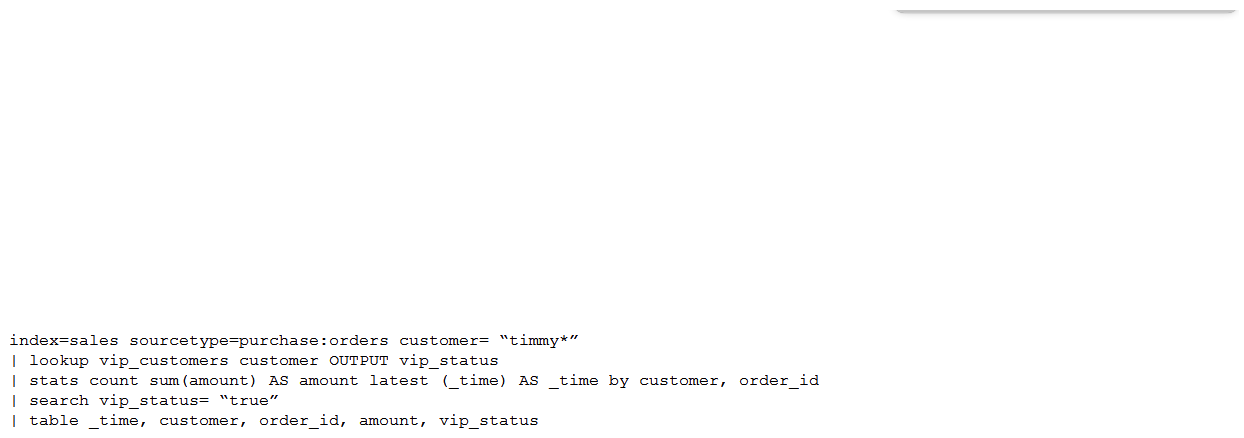

Consider the search shown below. What is this search's intended function?

A) To return all the web_log events from the web index that occur two hours before and after the most recent high severity, denied event found in the firewall index.

B) To find all the denied, high severity events in the firewall index, and use those events to further search for lateral movement within the web index.

C) To return all the web_log events from the web index that occur two hours before and after all high severity, denied events found in the firewall index.

D) To search the firewall index for web logs that have been denied and are of high severity.


37

In which directory should base config app(s) be placed to initialize an indexer?

A) $SPLUNK_HOME/etc/

B) $SPLUNK_HOME/etc/apps

C) $SPLUNK_HOME/etc/system/local

D) $SPLUNK_HOME/etc/slave-apps


38

What happens when an index cluster peer freezes a bucket?

A) All indexers with a copy of the bucket will delete it.

B) The cluster master will ensure another copy of the bucket is made on the other peers to meet the replication settings.

C) The cluster master will no longer perform fix-up activities for the bucket.

D) All indexers with a copy of the bucket will immediately roll it to frozen.


39

When using SAML, where does user authentication occur?

A) Splunk generates a SAML assertion that authenticates the user.

B) The Service Provider (SP) decodes the SAML request and authenticates the user.

C) The Identity Provider (IDP) decodes the SAML request and authenticates the user.

D) The Service Provider (SP) generates a SAML assertion that authenticates the user.


40

What does Splunk do when it indexes events?

A) Extracts the top 10 fields.

B) Extracts metadata fields such as host, source, and sourcetype.

C) Performs parsing, merging, and typing processes on universal forwarders.

D) Creates report acceleration summaries.


41

A customer would like to remove the output_file capability from users with the default user role to stop them from filling up the disk on the search head with lookup files. What is the best way to remove this capability from users?

A) Create a new role without the output_file capability that inherits the default user role and assign it to the users.

B) Create a new role with the output_file capability that inherits the default user role and assign it to the users.

C) Edit the default user role and remove the output_file capability.

D) Clone the default user role, remove the output_file capability, and assign it to the users.


42

In addition to the normal responsibilities of a search head cluster captain, which of the following is a default behavior?

A) The captain is not a cluster member and does not perform normal search activities.

B) The captain is a cluster member who performs normal search activities.

C) The captain is not a cluster member but does perform normal search activities.

D) The captain is a cluster member but does not perform normal search activities.


43

A customer has been using Splunk for one year, utilizing a single/all-in-one instance. This single Splunk server is now struggling to cope with the daily ingest rate. Also, Splunk has become a vital system in day-to-day operations making high availability a consideration for the Splunk service. The customer is unsure how to design the new environment topology in order to provide this. Which resource would help the customer gather the requirements for their new architecture?

A) Direct the customer to docs.splunk.com and tell them that all the information to help them select the right design is documented there.

B) Ask the customer to engage with the sales team immediately as they probably need a larger license.

C) Refer the customer to answers.splunk.com as someone else has probably already designed a system that meets their requirements.

D) Refer the customer to the Splunk Validated Architectures document in order to guide them through which approved architectures could meet their requirements.


44

Where does the bloomfilter reside?

A) $SPLUNK_HOME/var/lib/splunk/indexfoo/db/db_1553504858_1553504507_8

B) $SPLUNK_HOME/var/lib/splunk/indexfoo/db/db_1553504858_1553504507_8/*.tsidx

C) $SPLUNK_HOME/var/lib/splunk/fishbucket

D) $SPLUNK_HOME/var/lib/splunk/indexfoo/db/db_1553504858_1553504507_8/rawdata
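For reference, the bucket directory name used in these options follows the db_<newest_time>_<oldest_time>_<local_id> convention Splunk uses for non-clustered warm and cold buckets, with both times in epoch seconds. The minimal Python sketch below decodes the example name. parse_bucket_dir and BucketName are hypothetical names for illustration; the sketch assumes a non-clustered bucket and deliberately rejects clustered copies (which carry an extra GUID suffix) and replicated copies (which use an rb_ prefix).

```python
from datetime import datetime, timezone
from typing import NamedTuple


class BucketName(NamedTuple):
    newest: datetime  # time of the newest event in the bucket
    oldest: datetime  # time of the oldest event in the bucket
    local_id: int     # bucket ID local to this indexer


def parse_bucket_dir(name: str) -> BucketName:
    """Decode a non-clustered warm/cold bucket directory name.

    Hypothetical helper: expects exactly db_<newest>_<oldest>_<local_id>.
    Clustered (db_..._<guid>) and replicated (rb_...) names are rejected.
    """
    parts = name.split("_")
    if len(parts) != 4 or parts[0] != "db":
        raise ValueError(f"unexpected bucket directory name: {name!r}")
    _, newest, oldest, local_id = parts
    return BucketName(
        newest=datetime.fromtimestamp(int(newest), tz=timezone.utc),
        oldest=datetime.fromtimestamp(int(oldest), tz=timezone.utc),
        local_id=int(local_id),
    )


bucket = parse_bucket_dir("db_1553504858_1553504507_8")
print(bucket.local_id)                # 8
print(bucket.newest - bucket.oldest)  # 0:05:51
```

The time span in the name covers only the events in that bucket; here the bucket holds 351 seconds of data.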


45

In a large cloud customer environment with many (>100) dynamically created endpoint systems, each with a UF already deployed, what is the best approach for associating these systems with an appropriate serverclass on the deployment server?

A) Work with the cloud orchestration team to create a common host-naming convention for these systems so a simple pattern can be used in the serverclass.conf whitelist attribute.

B) Create a CSV lookup file for each serverclass, manually keep track of the endpoints within this CSV file, and leverage the whitelist.from_pathname attribute in serverclass.conf.

C) Work with the cloud orchestration team to dynamically insert an appropriate clientName setting into each endpoint's local/deploymentclient.conf, which can be matched by whitelist in serverclass.conf.

D) Using an installation bootstrap script, run a CLI command to assign a clientName setting and permit serverclass.conf whitelist simplification.


46

What happens to the indexer cluster when the indexer Cluster Master (CM) runs out of disk space?

A) A warm standby CM needs to be brought online as soon as possible before an indexer has an outage.

B) The indexer cluster will continue to operate as long as no indexers fail.

C) If the indexer cluster has site failover configured in the CM, the second cluster master will take over.

D) The indexer cluster will continue to operate as long as a replacement CM is deployed within 24 hours.


47

Which of the following processors occur in the indexing pipeline?

A) tcp out, syslog out

B) Regex replacement, annotator

C) Aggregator

D) UTF-8, linebreaker, header


48

In which of the following scenarios is a subsearch the most appropriate?

A) When joining results from multiple indexes.

B) When dynamically filtering hosts.

C) When filtering indexed fields.

D) When joining multiple large datasets.


49

When a bucket rolls from cold to frozen on a clustered indexer, which of the following scenarios occurs?

A) All replicated copies will be rolled to frozen; original copies will remain.

B) Replicated copies of the bucket will remain on all other indexers and the Cluster Master (CM) assigns a new primary bucket.

C) The bucket rolls to frozen on all clustered indexers simultaneously.

D) Nothing. Replicated copies of the bucket will remain on all other indexers until a local retention rule causes it to roll.


50

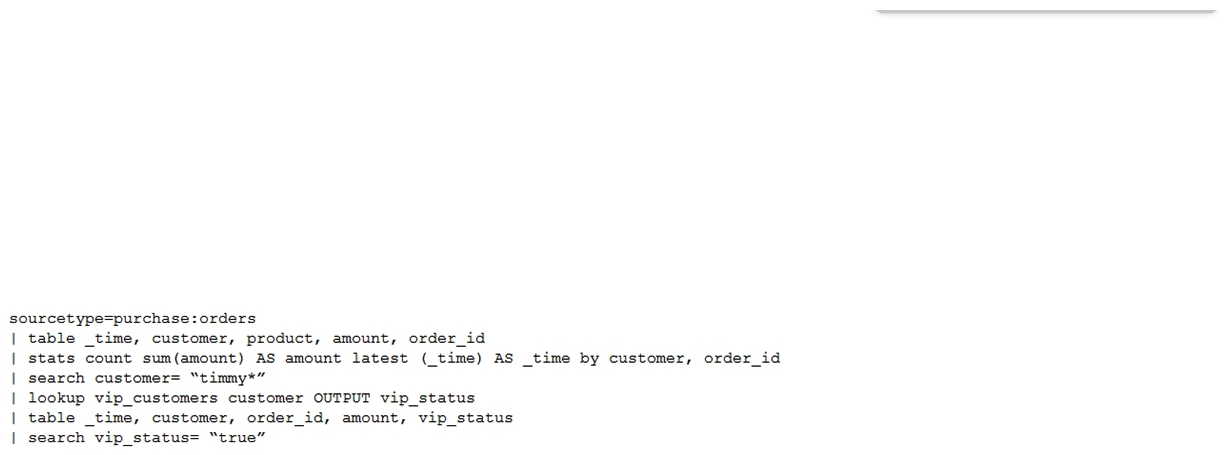

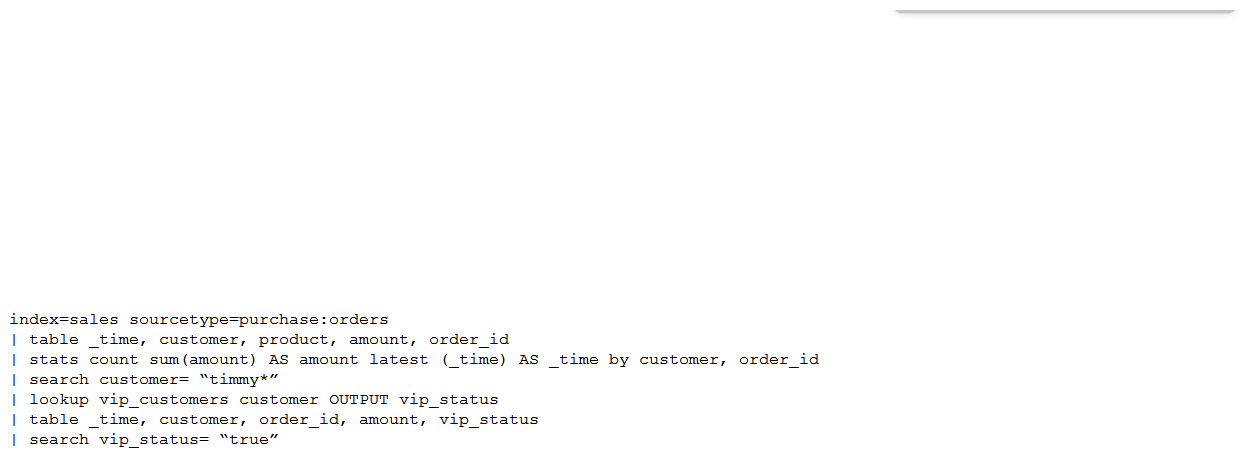

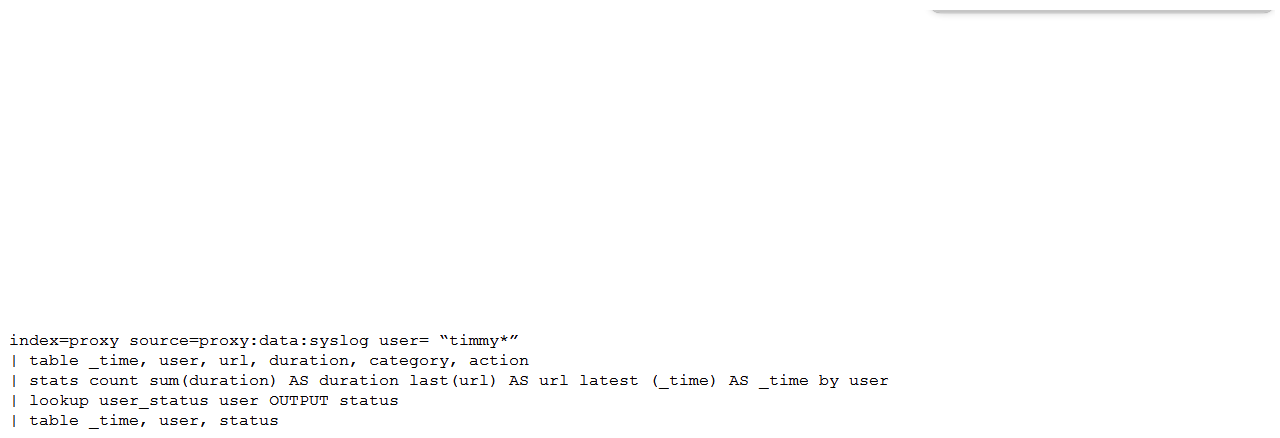

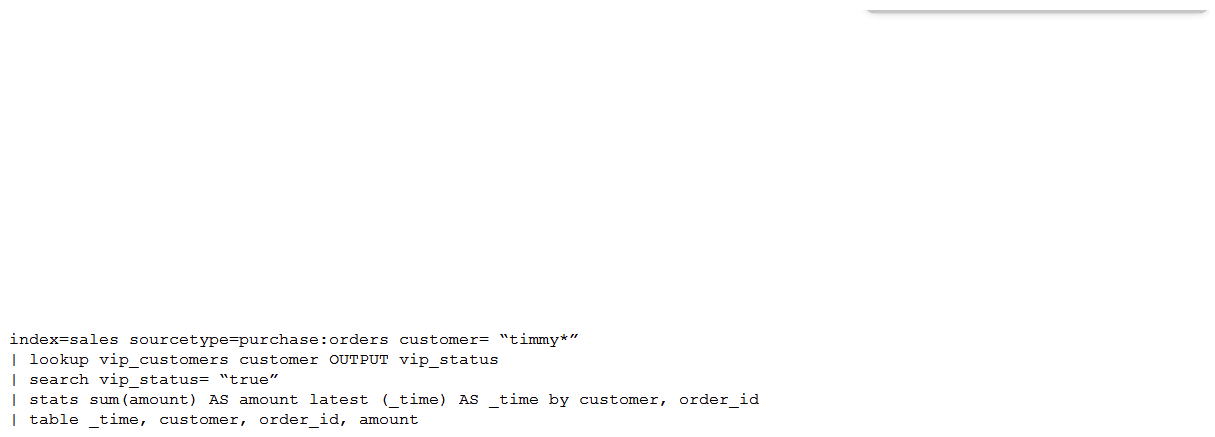

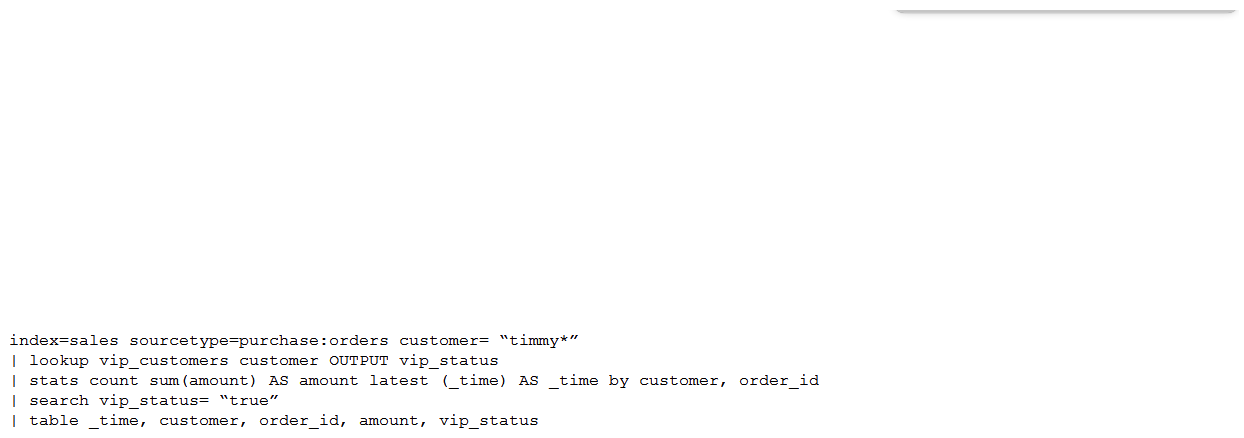

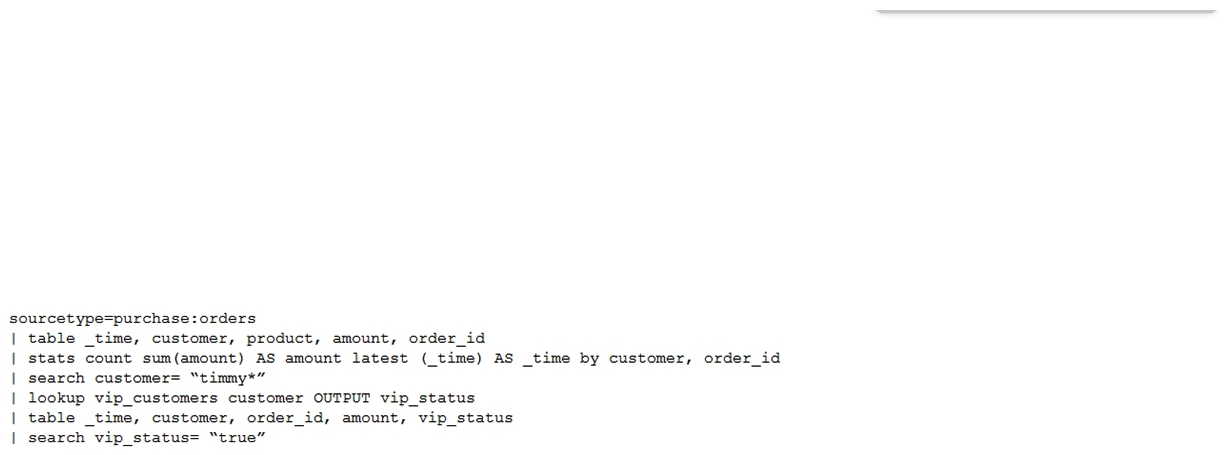

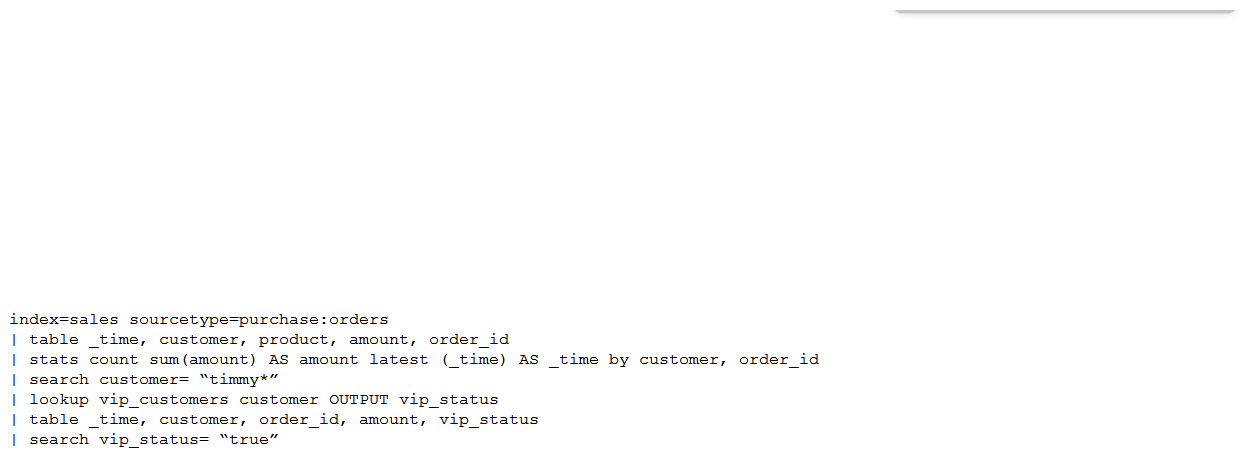

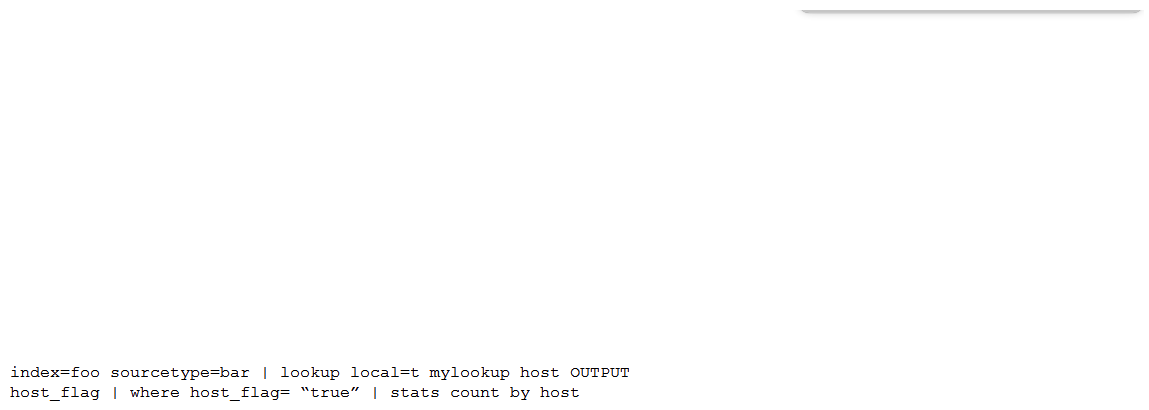

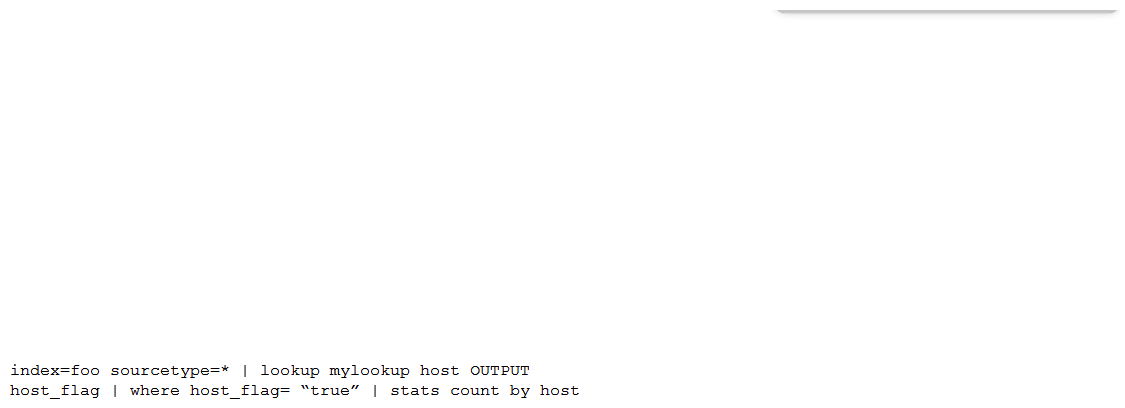

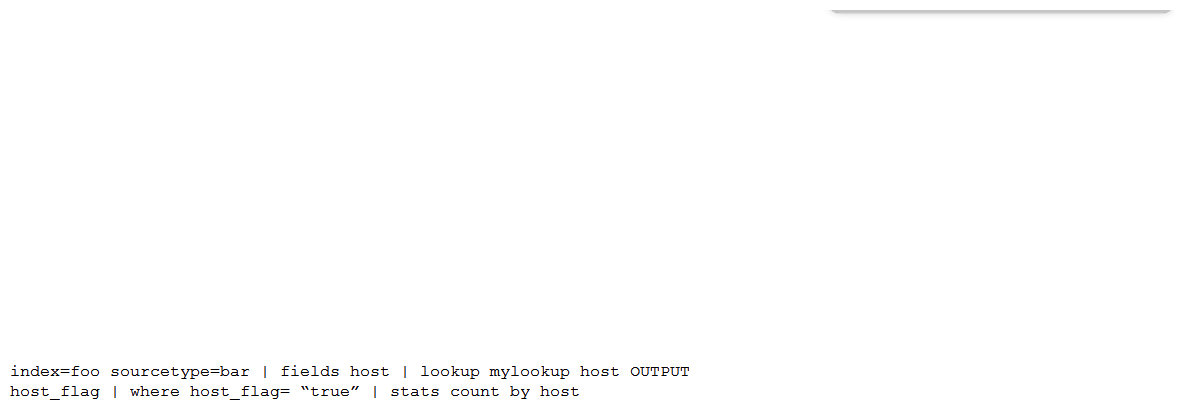

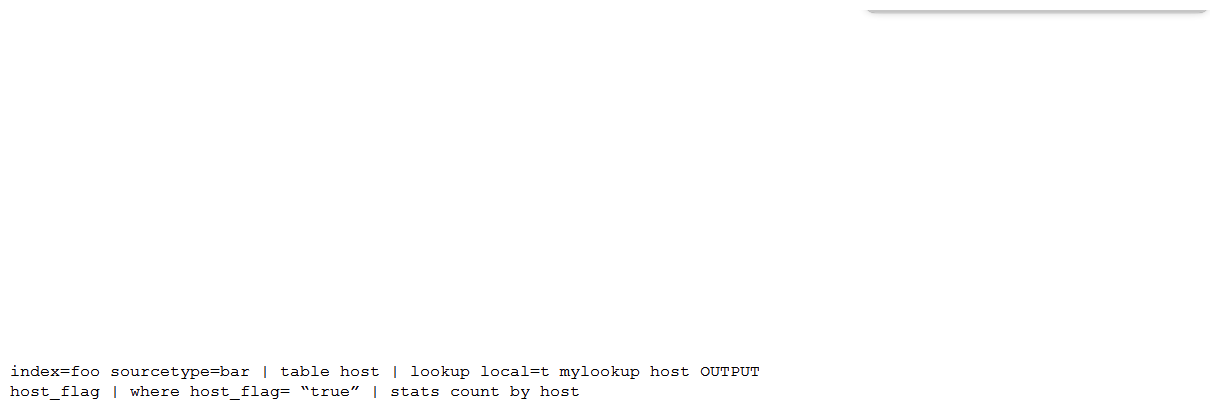

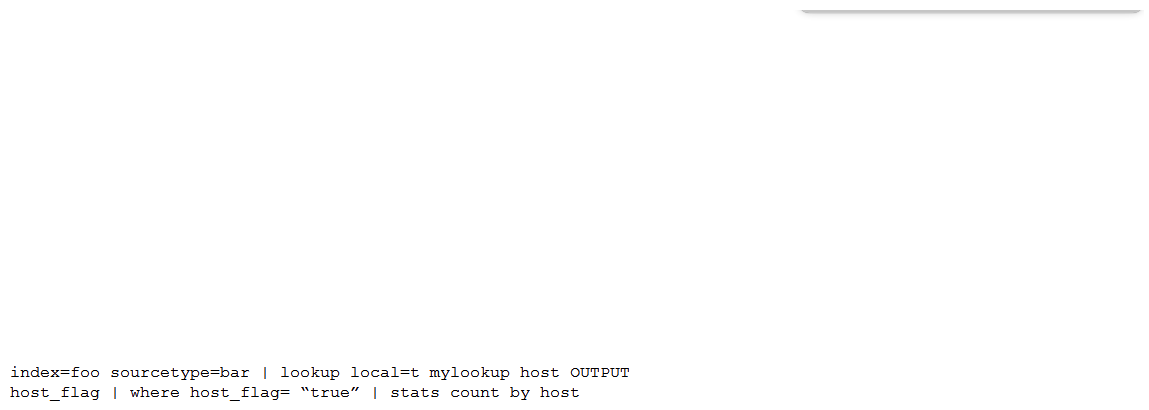

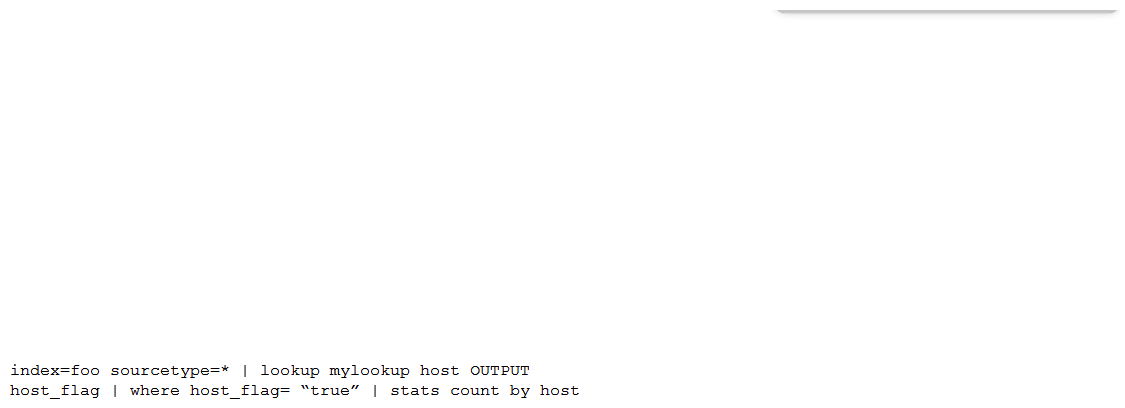

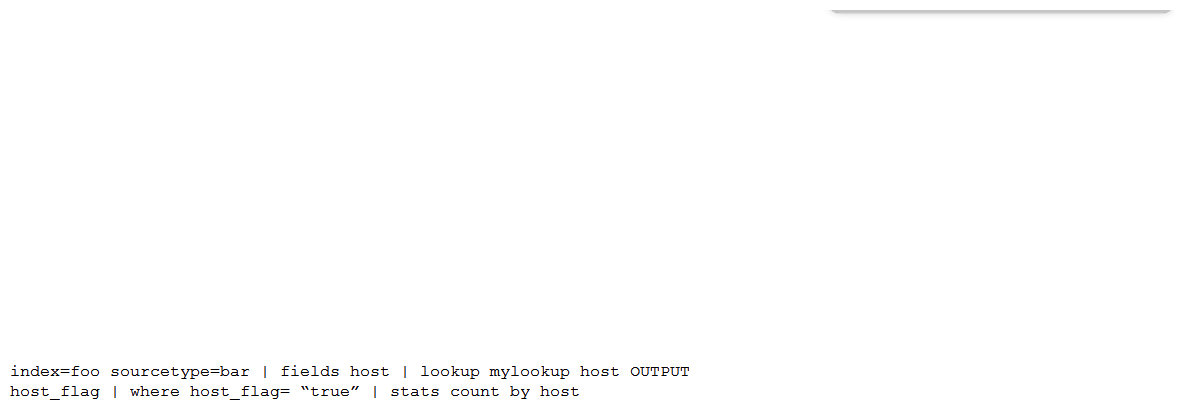

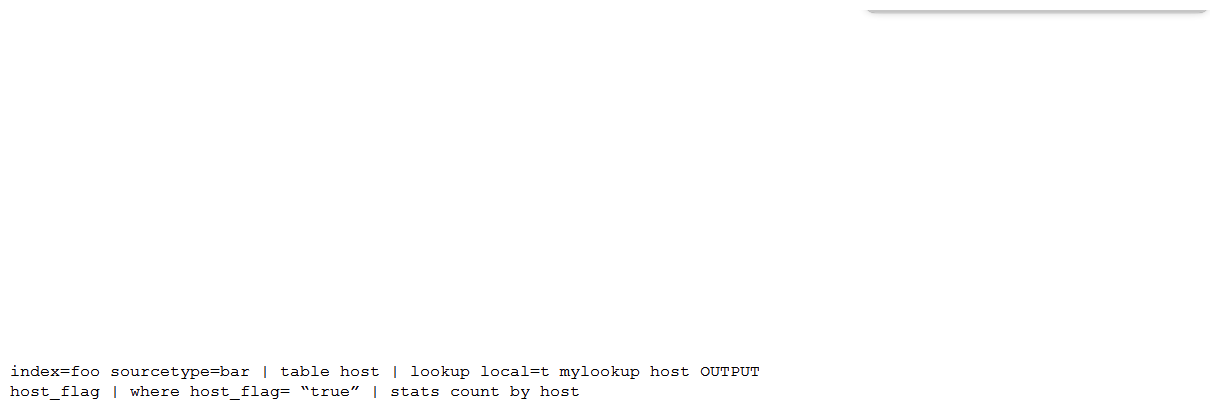

A customer has written the following search: How can the search be rewritten to maximize efficiency?

A)

B)

C)

D)


51

A customer has a number of inefficient regex replacement transforms being applied. Under heavy load, the indexers are struggling to maintain the expected indexing rate. In a worst-case scenario, which queue(s) would be expected to fill up?

A) Typing, merging, parsing, input

B) Parsing

C) Typing

D) Indexing, typing, merging, parsing, input


52

A non-ES customer has a concern about data availability during a disaster recovery event. Which of the following Splunk Validated Architectures (SVAs) would be recommended for that use case?

A) Topology Category Code: M4

B) Topology Category Code: M14

C) Topology Category Code: C13

D) Topology Category Code: C3


53

Which event processing pipeline contains the regex replacement processor that would be called upon to run event masking routines on events as they are ingested?

A) Merging pipeline

B) Indexing pipeline

C) Typing pipeline

D) Parsing pipeline


54

A customer has downloaded the Splunk App for AWS from Splunkbase and installed it in a search head cluster following the instructions using the deployer. A power user modifies a dashboard in the app on one of the search head cluster members. The app containing an updated dashboard is upgraded to the latest version by following the instructions via the deployer. What happens?

A) The updated dashboard will not be deployed globally to all users, due to the conflict with the power user's modified version of the dashboard.

B) Applying the search head cluster bundle will fail due to the conflict.

C) The updated dashboard will be available to the power user.

D) The updated dashboard will not be available to the power user; they will see their modified version.


55

An index receives approximately 50 GB of data per day per indexer at an even and consistent rate. The customer would like to keep this data searchable for a minimum of 30 days. In addition, they have hourly scheduled searches that process a week's worth of data and are quite sensitive to search performance. Given ideal conditions (no restarts and no drops or bursts in data volume), and following PS best practices, which of the following sets of indexes.conf settings can be leveraged to meet the requirements?

A) frozenTimePeriodInSecs, maxDataSize, maxVolumeDataSizeMB, maxHotBuckets

B) maxDataSize, maxTotalDataSizeMB, maxHotBuckets, maxGlobalDataSizeMB

C) maxDataSize, frozenTimePeriodInSecs, maxVolumeDataSizeMB

D) frozenTimePeriodInSecs, maxWarmDBCount, homePath.maxDataSizeMB, maxHotSpanSecs
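As a worked example of the arithmetic behind this scenario, the Python sketch below converts the 30-day requirement into seconds (the unit frozenTimePeriodInSecs is expressed in) and estimates how much raw data per indexer falls inside the retention period and inside the one-week search window. It uses only the figures stated in the question and deliberately ignores compression, index-file overhead, and replication, all of which a real sizing exercise would have to account for.

```python
SECONDS_PER_DAY = 86_400

# Figures taken from the question (per indexer)
daily_ingest_gb = 50      # raw data indexed per day
retention_days = 30       # minimum searchable period
search_window_days = 7    # hourly scheduled searches cover one week

# frozenTimePeriodInSecs is expressed in seconds
frozen_time_period_in_secs = retention_days * SECONDS_PER_DAY

# Raw (uncompressed) volume, ignoring compression and replication
raw_gb_in_retention = daily_ingest_gb * retention_days
raw_gb_in_search_window = daily_ingest_gb * search_window_days

print(frozen_time_period_in_secs)  # 2592000
print(raw_gb_in_retention)         # 1500
print(raw_gb_in_search_window)     # 350
```

Note that frozenTimePeriodInSecs is age-based; size-based limits such as maxTotalDataSizeMB can still cause buckets to freeze earlier if they are reached first.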


56

As a best practice which of the following should be used to ingest data on clustered indexers?

A) Monitoring (via a process), collecting data (modular inputs) from remote systems/applications

B) Modular inputs, HTTP Event Collector (HEC), inputs.conf monitor stanza

C) Actively listening on ports, monitoring (via a process), collecting data from remote systems/applications

D) splunktcp, splunktcp-ssl, HTTP Event Collector (HEC)


57

A customer has 30 indexers in an indexer cluster configuration and two search heads. They are writing an SPL search for a particular use case but are concerned that it takes too long to run, even over short time ranges. How can the Search Job Inspector capabilities be used to help validate and understand the customer's concerns?

A) Search Job Inspector provides statistics to show how much time and the number of events each indexer has processed.

B) Search Job Inspector provides a Search Health Check capability that provides an optimized SPL query the customer should try instead.

C) Search Job Inspector cannot be used to help troubleshoot the slow-performing search; the customer should review index=_introspection instead.

D) The customer is using the transaction SPL search command, which is known to be slow.


58

When monitoring and forwarding events collected from a file containing unstructured textual events, what is the difference in the Splunk2Splunk payload traffic sent between a universal forwarder (UF) and indexer compared to the Splunk2Splunk payload sent between a heavy forwarder (HF) and the indexer layer? (Assume that the file is being monitored locally on the forwarder.)

A) The payload format sent from the UF versus the HF is exactly the same. The payload size is identical because they're both sending 64K chunks.

B) The UF sends a stream of data containing one set of metadata fields to represent the entire stream, whereas the HF sends individual events, each with their own metadata fields attached, resulting in a larger payload.

C) The UF will generally send the payload in the same format, but only when the sourcetype is specified in inputs.conf and EVENT_BREAKER_ENABLE is set to true.

D) The HF sends a stream of 64K TCP chunks with one set of metadata fields attached to represent the entire stream, whereas the UF sends individual events, each with their own metadata fields attached.


59

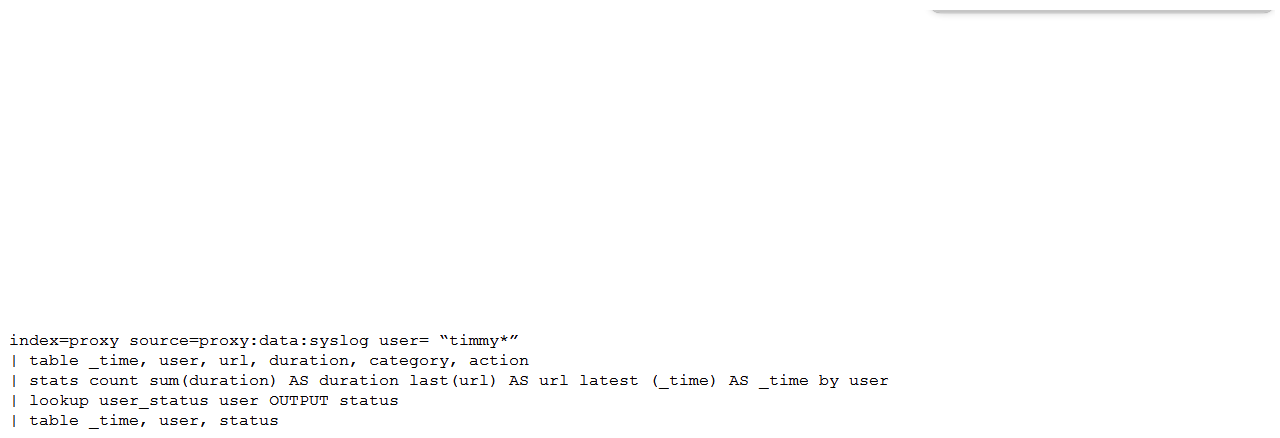

Which of the following is the most efficient search?

A)

B)

C)

D)


60

In an environment that has Indexer Clustering, the Monitoring Console (MC) provides dashboards to monitor environment health. As the environment grows over time and new indexers are added, which steps would ensure the MC is aware of the additional indexers?

A) No changes are necessary; the Monitoring Console has self-configuration capabilities.

B) Using the MC setup UI, review and apply the changes.

C) Remove and re-add the cluster master from the indexer clustering UI page to add new peers, then apply the changes under the MC setup UI.

D) Each new indexer needs to be added using the distributed search UI, then settings must be saved under the MC setup UI.


61

As data enters the indexer, it proceeds through a pipeline where event processing occurs. In which pipeline does line breaking occur?

A) Indexing

B) Typing

C) Merging

D) Parsing


62

A customer is using both internal Splunk authentication and LDAP for user management. If a username exists in both $SPLUNK_HOME/etc/passwd and LDAP, which of the following statements is accurate?

A) The internal Splunk authentication will take precedence.

B) Authentication will only succeed if the password is the same in both systems.

C) The LDAP user account will take precedence.

D) Splunk will return an error because it does not support overlapping usernames.
