Deck 16: Markov Processes

1

A unique matrix of transition probabilities should be developed for each customer.

False

2

A Markov chain cannot consist of all absorbing states.

False

3

A transition probability describes

A) the probability of a success in repeated, independent trials.

B) the probability that a system in a particular state now will be in a specific state next period.

C) the probability of reaching an absorbing state.

D) None of the alternatives is correct.

B

4

All Markov chains have steady-state probabilities.

5

The probability of reaching an absorbing state is given by the

A) R matrix.

B) NR matrix.

C) Q matrix.

D) $(I - Q)^{-1}$ matrix.

6

All entries in a matrix of transition probabilities sum to 1.

7

All Markov chain transition matrices have the same number of rows as columns.

8

Steady state probabilities are independent of the initial state.

9

In Markov analysis, we are concerned with the probability that the

A) state is part of a system.

B) system is in a particular state at a given time.

C) time has reached a steady state.

D) transition will occur.

10

If the probability of making a transition from a state is 0, then that state is called a(n)

A) steady state.

B) final state.

C) origin state.

D) absorbing state.

11

Absorbing state probabilities are the same as

A) steady state probabilities.

B) transition probabilities.

C) fundamental probabilities.

D) None of the alternatives is true.

12

Markov processes use historical probabilities.

13

The probability that a system is in a particular state after a large number of periods is

A) independent of the beginning state of the system.

B) dependent on the beginning state of the system.

C) equal to one half.

D) the same for every ending system.
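A quick numerical illustration in Python. It uses the commodity-price matrix from card 28, with states ordered Down, Unchanged, Up. The code pushes two different starting distributions forward with $\pi(n+1) = \pi(n)P$ and shows that they converge to the same vector. This independence holds for chains like this one, where every state can reach every other and the chain is aperiodic. It does not carry over to chains with two or more absorbing states, where the long-run outcome depends on where the process starts. The helper names `step` and `distribution_after` are illustrative, not taken from the deck.

```python
# Card 28's commodity-price matrix: rows = current state, columns = next state,
# states ordered Down, Unchanged, Up.
P = [
    [0.50, 0.40, 0.10],
    [0.35, 0.30, 0.35],
    [0.10, 0.30, 0.60],
]


def step(pi, P):
    """Advance one period: pi(n+1) = pi(n) P, with pi a row vector."""
    n = len(P)
    return [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]


def distribution_after(pi0, P, periods):
    """State probabilities after `periods` steps from the initial vector pi0."""
    pi = list(pi0)
    for _ in range(periods):
        pi = step(pi, P)
    return pi


start_down = [1.0, 0.0, 0.0]  # period 0: certainly Down
start_up = [0.0, 0.0, 1.0]    # period 0: certainly Up

for n in (1, 5, 20, 50):
    a = distribution_after(start_down, P, n)
    b = distribution_after(start_up, P, n)
    print(n, [round(x, 4) for x in a], [round(x, 4) for x in b])
```

The two vectors printed for each n draw together as n grows. Both approach the steady-state probabilities that card 28 asks for.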

14

For a situation with weekly dining at either an Italian or Mexican restaurant,

A) the weekly visit is the trial and the restaurant is the state.

B) the weekly visit is the state and the restaurant is the trial.

C) the weekly visit is the trend and the restaurant is the transition.

D) the weekly visit is the transition and the restaurant is the trend.

15

The probability that the system is in state 2 in the 5th period is $\pi_5(2)$.
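The following sketches the notation these cards appear to use, inferred from the wording here and from $p_{ij}$ in card 16. $\pi_i(n)$ is the probability that the system is in state $i$ in period $n$: the subscript is the state and the parenthesized argument is the period. The state probabilities move forward one period at a time through the transition matrix $P$, where $\pi(n) = [\pi_1(n), \pi_2(n), \dots]$ is a row vector:

$$
\pi_j(n+1) = \sum_i \pi_i(n)\, p_{ij}, \qquad \text{i.e.} \qquad \pi(n+1) = \pi(n)\, P.
$$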

16

The probability of going from state 1 in period 2 to state 4 in period 3 is

A) $p_{12}$

B) $p_{23}$

C) $p_{14}$

D) $p_{43}$

17

If an absorbing state exists, then the probability that a unit will ultimately move into the absorbing state is given by the steady state probability.

18

The fundamental matrix is used to calculate the probability of the process moving into each absorbing state.

19

Analysis of a Markov process

A) describes future behavior of the system.

B) optimizes the system.

C) leads to higher order decision making.

D) All of the alternatives are true.

20

At steady state

A) $\pi_1(n+1) > \pi_1(n)$

B) $\pi_1 = \pi_2$

C) $\pi_1 + \pi_2 \geq 1$

D) $\pi_1(n+1) = \pi_1$
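At steady state the state probabilities stop changing from one period to the next. In the $\pi_i(n)$ notation of these cards, a sketch of the standard conditions is:

$$
\pi_i(n+1) = \pi_i(n) = \pi_i, \qquad \pi_j = \sum_i \pi_i\, p_{ij}, \qquad \sum_i \pi_i = 1.
$$

For two states this reduces to $\pi_1 = \pi_1 p_{11} + \pi_2 p_{21}$ together with $\pi_1 + \pi_2 = 1$.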

21

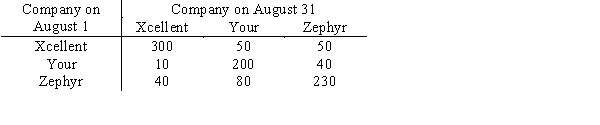

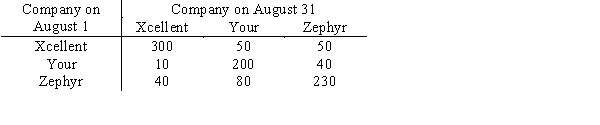

A city is served by three cable TV companies: Xcellent Cable, Your Cable, and Zephyr Cable. A survey of 1000 cable subscribers shows this breakdown of customers from the beginning to the end of August.

a. Construct the transition matrix.

b. What was each company's share of the market at the beginning and at the end of the month?

c. If the current trend continues, what will the market shares be?
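The survey table appears only as an image. Assume it gives $n_{ij}$, the number of the 1000 subscribers who were with company $i$ at the beginning of August and with company $j$ at the end. Parts a and b then follow from row and column sums:

$$
p_{ij} = \frac{n_{ij}}{\sum_k n_{ik}}, \qquad s_i^{\text{start}} = \frac{\sum_k n_{ik}}{1000}, \qquad s_j^{\text{end}} = \frac{\sum_i n_{ij}}{1000}.
$$

Part c is the steady-state calculation $\pi = \pi P$ with $\sum_i \pi_i = 1$, applied to the matrix from part a.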

22

The matrix of transition probabilities below deals with brand loyalty to Bark Bits and Canine Chow dog food.

a. What are the steady state probabilities?

b. What is the probability that a customer will switch brands on the next purchase after a large number of periods?
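With two states, the steady-state equations have a closed form. Label Bark Bits as state 1 and Canine Chow as state 2; this ordering is an assumption, since the matrix itself is not reproduced here. Because $1 - p_{11} = p_{12}$, the equation $\pi_1 = \pi_1 p_{11} + \pi_2 p_{21}$ becomes $\pi_1 p_{12} = \pi_2 p_{21}$. Combined with $\pi_1 + \pi_2 = 1$, this gives

$$
\pi_1 = \frac{p_{21}}{p_{12} + p_{21}}, \qquad \pi_2 = \frac{p_{12}}{p_{12} + p_{21}}.
$$

For part b, a customer in the long run switches on the next purchase with probability

$$
\pi_1 p_{12} + \pi_2 p_{21} = \frac{2\, p_{12}\, p_{21}}{p_{12} + p_{21}}.
$$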

23

Bark Bits Company is planning an advertising campaign to raise the brand loyalty of its customers to .80.

a. The former transition matrix is

What is the new one?

b. What are the new steady state probabilities?

c. If each point of market share increases profit by $15,000, what is the most you would pay for the advertising?

24

A television ratings company surveys 100 viewers on March 1 and April 1 to find out what they were watching at 6:00 p.m. The choices are the local NBC affiliate's local news, the CBS affiliate's local news, or "Other", which includes all other channels and not watching TV. The results show:

a. What are the numbers in each choice for April 1?

b. What is the transition matrix?

c. What ratings percentages do you predict for May 1?

25

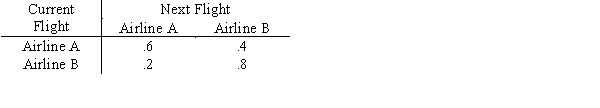

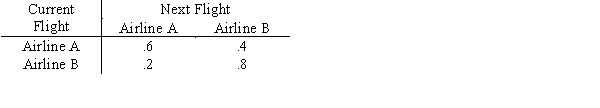

Two airlines offer conveniently scheduled flights to the airport nearest your corporate headquarters. Historically, flights have been scheduled as reflected in this transition matrix.

a. If your last flight was on B, what is the probability that your next flight will be on A?

b. If your last flight was on B, what is the probability that your flight after next will be on A?

c. What are the steady state probabilities?
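Part b needs a two-step transition probability. The next flight can be on A or on B, and the flight after that must be on A. Summing over the intermediate airline gives the $(B, A)$ entry of $P^2$:

$$
p^{(2)}_{BA} = p_{BA}\, p_{AA} + p_{BB}\, p_{BA}.
$$

For part c, the two-state closed form $\pi_A = p_{BA} / (p_{AB} + p_{BA})$ and $\pi_B = 1 - \pi_A$ applies.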

26

Explain the concept of memorylessness.
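Memorylessness (the Markov property) says that, once the current state is known, the earlier history adds no information about the next state. In symbols, with $X_n$ denoting the state in period $n$ (notation assumed here):

$$
\Pr(X_{n+1} = j \mid X_n = i,\, X_{n-1} = i_{n-1},\, \dots,\, X_0 = i_0) = \Pr(X_{n+1} = j \mid X_n = i) = p_{ij}.
$$

The final equality uses the same $p_{ij}$ in every period. That is the additional assumption of stationary transition probabilities.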

27

A state i is a transient state if there exists a state j that is reachable from state i, but state i is not reachable from state j.

28

The daily price of a farm commodity is up, down, or unchanged from the day before. Analysts predict that if the last price was down, there is a .5 probability the next will be down, and a .4 probability the price will be unchanged. If the last price was unchanged, there is a .35 probability it will be down and a .35 probability it will be up. For prices whose last movement was up, the probabilities of down, unchanged, and up are .1, .3, and .6.

a. Construct the matrix of transition probabilities.

b. Calculate the steady state probabilities.
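The following Python sketch covers parts a and b. It solves $\pi = \pi P$ with $\sum_i \pi_i = 1$ exactly using `fractions.Fraction`. The entries the card leaves unstated are filled in only from the requirement that each row sums to 1: .1 for Down to Up, and .3 for Unchanged to Unchanged. The `steady_state` helper is a hypothetical name. It replaces one redundant balance equation with the normalization, which assumes (as holds here) that every state can reach every other.

```python
from fractions import Fraction as F

STATES = ["Down", "Unchanged", "Up"]

# Part a: rows = last movement, columns = next movement (Down, Unchanged, Up).
# Entries not stated on the card follow from each row summing to 1.
P = [
    [F("0.5"), F("0.4"), F("0.1")],    # last Down (Up = 1 - .5 - .4)
    [F("0.35"), F("0.3"), F("0.35")],  # last Unchanged (Unchanged = 1 - .35 - .35)
    [F("0.1"), F("0.3"), F("0.6")],    # last Up
]


def steady_state(P):
    """Solve pi = pi P with sum(pi) = 1 exactly, by Gauss-Jordan elimination.

    Equation i of (P^T - I) pi = 0 reads sum_j pi_j p_ji - pi_i = 0. One of
    these is redundant, so the last one is replaced by sum(pi) = 1.
    """
    n = len(P)
    # Augmented rows [coefficients | right-hand side].
    M = [[P[j][i] - (1 if i == j else 0) for j in range(n)] + [F(0)]
         for i in range(n)]
    M[-1] = [F(1)] * n + [F(1)]  # normalization row
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        pv = M[col][col]
        M[col] = [x / pv for x in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    return [M[i][n] for i in range(n)]


pi = steady_state(P)
for name, p in zip(STATES, pi):
    print(f"{name:<10} {p} ~ {float(p):.4f}")
```

Exact arithmetic keeps the answer as fractions, so the printed probabilities sum to exactly 1.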

29

Why is a computer necessary for some Markov analyses?

30

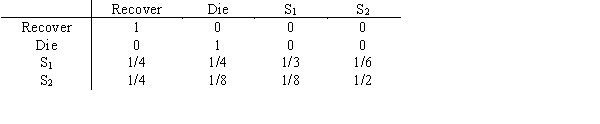

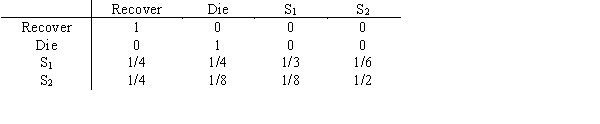

The medical prognosis for a patient with a certain disease is to recover, to die, to exhibit symptom 1, or to exhibit symptom 2. The matrix of transition probabilities is

a. What are the absorbing states?

b. What is the probability that a patient with symptom 2 will recover?

31

Where is a fundamental matrix, N, used? How is N computed?
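Here is a sketch of the standard construction, assuming the states are ordered with the absorbing states first (as in card 38). Partition the transition matrix, invert $I - Q$, and multiply by $R$:

$$
P = \begin{bmatrix} I & 0 \\ R & Q \end{bmatrix}, \qquad N = (I - Q)^{-1}, \qquad (NR)_{ta} = \Pr(\text{eventually absorbed in } a \mid \text{start in transient state } t).
$$

$R$ holds the one-step probabilities from transient to absorbing states, and $Q$ holds those among transient states. Entry $(t, s)$ of $N$ is the expected number of periods spent in transient state $s$, counting the starting period, before absorption when starting in $t$.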

32

A state i is an absorbing state if $p_{ii} = 0$.

33

All entries in a row of a matrix of transition probabilities sum to 1.
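A small Python check makes the conventions behind cards 6, 7 and 33 concrete. It assumes the deck's layout of rows for the current state and columns for the next state. Under that layout the matrix is square, every entry lies in [0, 1], and each row (not the whole matrix) sums to 1. The helper `is_transition_matrix` is a hypothetical name, and the example matrix is card 28's.

```python
import math


def is_transition_matrix(P, tol=1e-9):
    """Check the defining properties of a Markov transition matrix.

    Rows are the current state and columns the next state, so the matrix
    must be square, every entry must lie in [0, 1], and each row must sum
    to 1. (The whole matrix therefore sums to the number of states.)
    """
    n = len(P)
    if any(len(row) != n for row in P):  # square: same states in and out
        return False
    if any(p < 0 or p > 1 for row in P for p in row):
        return False
    return all(math.isclose(sum(row), 1.0, abs_tol=tol) for row in P)


# Card 28's commodity-price matrix (Down, Unchanged, Up).
P = [[0.50, 0.40, 0.10], [0.35, 0.30, 0.35], [0.10, 0.30, 0.60]]

print(is_transition_matrix(P))                         # True
print(round(sum(map(sum, P)), 9))                      # 3.0: one per row, not 1
print(is_transition_matrix([[0.5, 0.5], [0.2, 0.7]]))  # False: second row sums to 0.9
```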

34

Appointments in a medical office are scheduled every 15 minutes. Throughout the day, appointments will be running on time or late, depending on the previous appointment only, according to the following matrix of transition probabilities:

a. The day begins with the first appointment on time. What are the state probabilities for periods 1, 2, 3, and 4?

b. What are the steady state probabilities?

35

What assumptions are necessary for a Markov process to have stationary transition probabilities?

36

For Markov processes having the memoryless property, the prior states of the system must be considered in order to predict the future behavior of the system.

37

Calculate the steady state probabilities for this transition matrix.

38

Accounts receivable have been grouped into the following states:

State 1: Paid
State 2: Bad debt
State 3: 0-30 days old
State 4: 31-60 days old

Sixty percent of all new bills are paid before they are 30 days old; the remainder move to state 4. Seventy percent of all 30-day-old bills are paid before they become 60 days old. If not paid, they are permanently classified as bad debts.

a. Set up the one-month Markov transition matrix.

b. What is the probability that an account in state 3 will be paid?
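The following Python sketch works both parts with exact fractions. It assumes this one-month reading of the card: a 0-30 day bill is either paid (0.6) or moves to 31-60 days (0.4), and a 31-60 day bill is either paid (0.7) or becomes bad debt (0.3). The code builds the canonical-form blocks $Q$ and $R$, then computes the fundamental matrix $N = (I - Q)^{-1}$ and the absorption probabilities $NR$. The helper names `absorbing_states`, `invert` and `matmul` are illustrative.

```python
from fractions import Fraction as F

# Card 38, absorbing states first: 1 Paid, 2 Bad debt, 3 0-30 days, 4 31-60 days.
# Rows = this month's state, columns = next month's state.
P = [
    [F(1), F(0), F(0), F(0)],          # Paid stays paid (absorbing)
    [F(0), F(1), F(0), F(0)],          # Bad debt stays bad debt (absorbing)
    [F("0.6"), F(0), F(0), F("0.4")],  # 60% paid; the rest age to 31-60 days
    [F("0.7"), F("0.3"), F(0), F(0)],  # 70% paid; the rest become bad debt
]


def absorbing_states(P):
    """Indices i with p_ii = 1: once entered, the process never leaves."""
    return [i for i in range(len(P)) if P[i][i] == 1]


def invert(M):
    """Inverse of a small square Fraction matrix by Gauss-Jordan elimination."""
    n = len(M)
    A = [row[:] + [F(int(i == j)) for j in range(n)] for i, row in enumerate(M)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        pv = A[col][col]
        A[col] = [x / pv for x in A[col]]
        for r in range(n):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [x - factor * y for x, y in zip(A[r], A[col])]
    return [row[n:] for row in A]


def matmul(X, Y):
    """Plain matrix product of two lists of lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


absorbing = absorbing_states(P)
transient = [i for i in range(len(P)) if i not in absorbing]
Q = [[P[i][j] for j in transient] for i in transient]  # transient -> transient
R = [[P[i][j] for j in absorbing] for i in transient]  # transient -> absorbing
I_minus_Q = [[F(int(i == j)) - Q[i][j] for j in range(len(Q))] for i in range(len(Q))]
N = invert(I_minus_Q)  # fundamental matrix
NR = matmul(N, R)      # rows: start in state 3 or 4; columns: Paid, Bad debt

print("absorbing states:", [s + 1 for s in absorbing])
print("P(eventually paid | state 3) =", NR[0][0])
```

Each row of `NR` sums to 1, because every account eventually ends up either paid or written off as bad debt.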

39

When absorbing states are present, each row of the transition matrix corresponding to an absorbing state will have a single 1 and all other probabilities will be 0.

40

Give two examples of how Markov analysis can aid decision making.

41

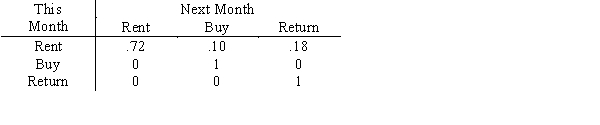

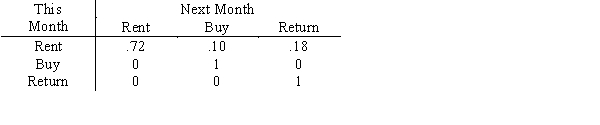

Rent-To-Keep rents household furnishings by the month. At the end of a rental month a customer can: a) rent the item for another month, b) buy the item, or c) return the item. The matrix below describes the month-to-month transition probabilities for 32-inch stereo televisions the shop stocks. What is the probability that a customer who rented a TV this month will eventually buy it?
